CSS 430 Book Notes Chapter 4 - Threads and Concurrency

4.1 Overview

Chapter objectives

- Identify the basic components of a thread, and contrast threads and processes.

- Describe the major benefits and significant challenges of designing multithreaded processes.

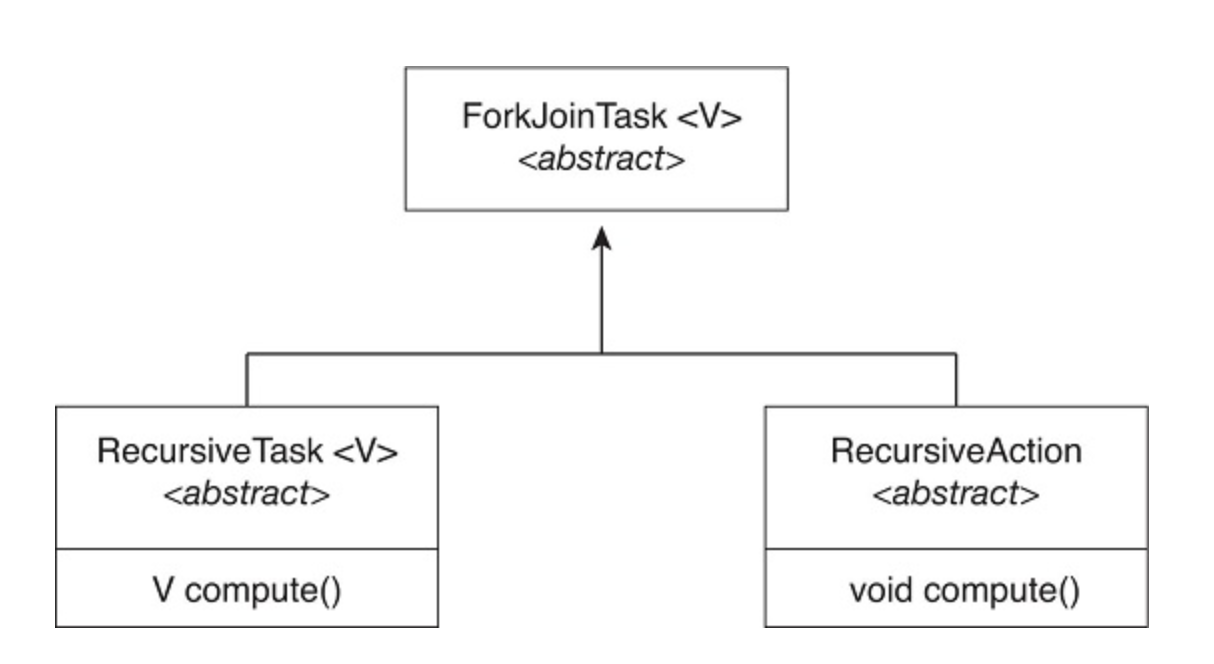

- Illustrate different approaches to implicit threading, including thread pools, fork-join, and Grand Central Dispatch.

- Describe how the Windows and Linux operating systems represent threads.

- Design multithreaded applications using the Pthreads, Java, and Windows threading APIs.

Overview

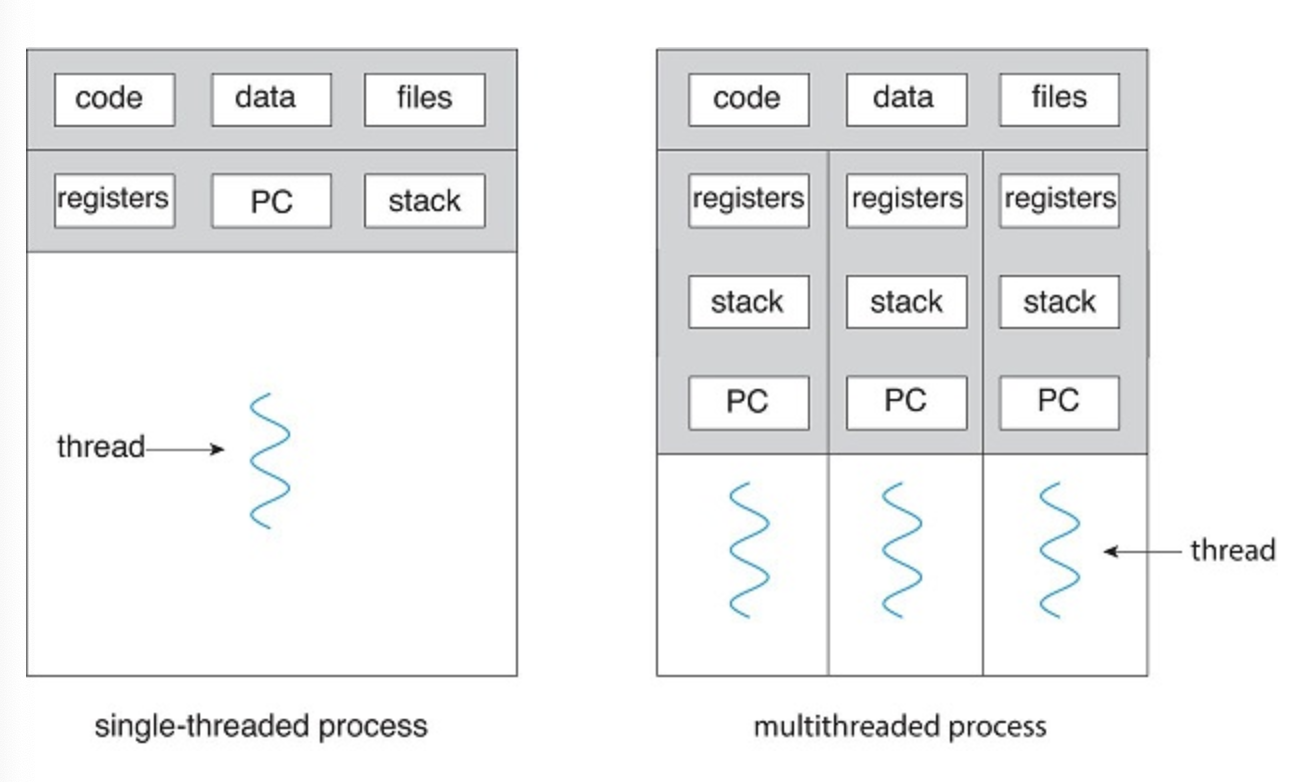

A thread is a basic unit of CPU utilization; it comprises a thread ID, a program counter (PC), a register set, and a stack.

A traditional process has a single thread of control. If a process has multiple threads of control, it can perform more than one task at a time.

Motivation

An application is typically implemented as a separate process with multiple threads of control.

- An application that creates photo thumbnails from a collection of images may use a separate thread to generate a thumbnail from each separate image.

- A web browser might have one thread display images or text while another thread retrieves data from the network.

- A word processor may have a thread for displaying graphics, another thread for responding to keystrokes from the user, and a third thread for performing spelling and grammar checking in the background.

Additionally, some applications may leverage multiple cores for processing

In general, it's more efficient to use one process that contains multiple threads.

Most OS kernels are also multithreaded with each thread performing a specific task like devices, memory management, or interrupt handling.

ps -ef will display kernel threads on a linux system

Benefits

There are four major categories when talking about threading benefits

1. Responsiveness

Multi threading allows an application to continue even if part of it is blocked or occupied., UI's can take advantage of this to manipulate the UI while a lengthy process runs in the background

2. Resource Sharing

Threads can share resources more easily since they belong to the same parent memory block

3. Economy

Since threads share the same process resources, there is less overhead for context switching

4. Scalability

You can scale greater on multiprocessor architecture.

4.2 Multicore Programming

Multithreaded programming provides a mechanism for more efficient use of multiple computing cores and improved concurrency

on a system with a single core, the execution threads will be "interleaved" over time, kinda shuffled together, as the core is only able to execute one thread at a time.

With multiple cores, concurrency means that some threads can run in parallel because the system can assign a separate thread to each core.

Concurrent systems allow multiple tasks to make progress...if say you have two tasks with 4 steps each, it might do task 1 step 2 followed by task 2 step 2 and so on

Parallel systems allow the processes to run simultaneously

This means you can have concurrency without parallelism

Programming Challenges

There are generally five areas that present challenges in programming for multicore programming

- Identifying tasks: This involves examining applications to find areas that can be divided into separate, concurrent tasks.

- Balance: programmers must also ensure that the tasks perform equal work of equal value. Using a separate execution core to run a task may not be worth the cost.

- Data splitting: As applications are divided into separate tasks, data accessed and manipulated by the tasks must be divided to run on separate cores.

- Data dependency: The data accessed by the tasks must be examined for dependencies between two or more tasks.

- Testing and debugging: many different execution paths are possible. Testing and debugging such concurrent programs is inherently more difficult than testing and debugging single-threaded applications.

A formula for identifying potential performance gains

Amdahl's law Wiki

Types of parallelism

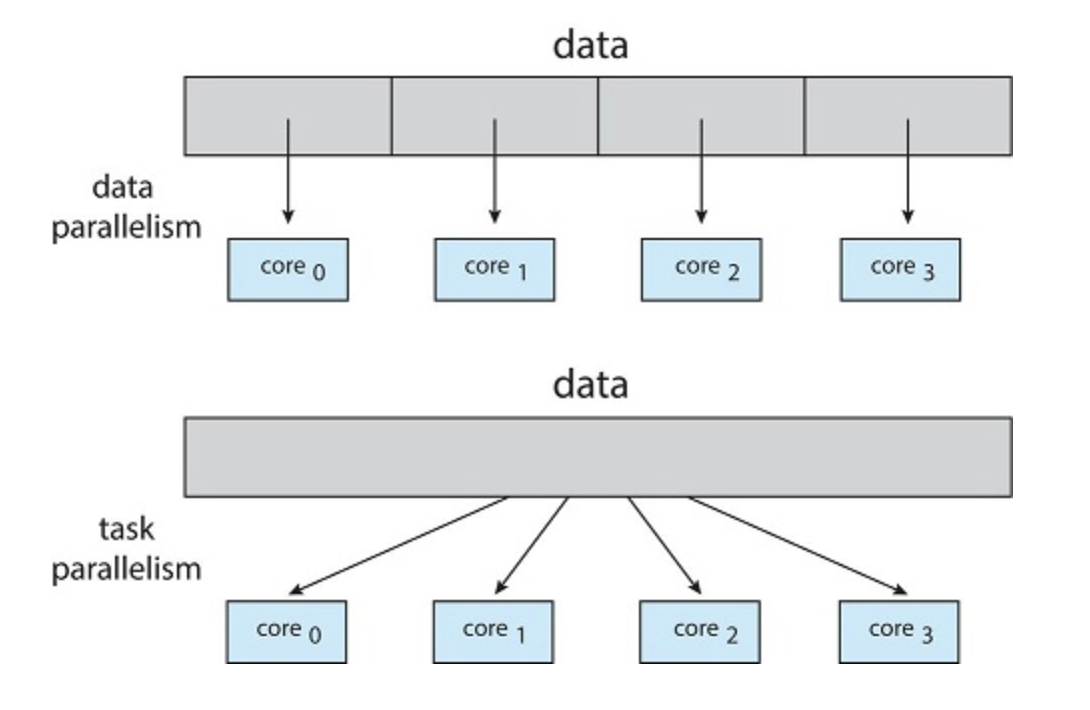

There are essentially two types of parallelism:

- Data parallelism: Distributing subsets of the same data across multiple cores and performing the same operation on each core. (think processing half of an array on one core and the second half on another)

- Task parallelism: each thread is performing a unique task on a different core. This could be using the same data or different data.

Data and Task Parallelism

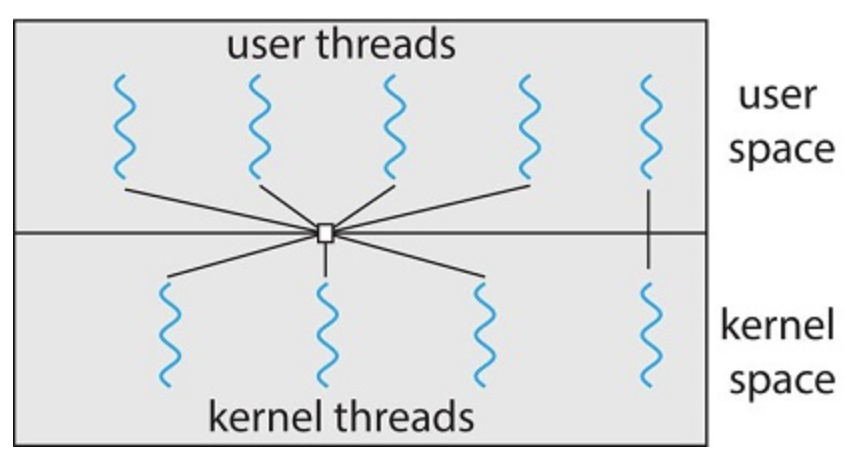

4.3 Multithreading Models

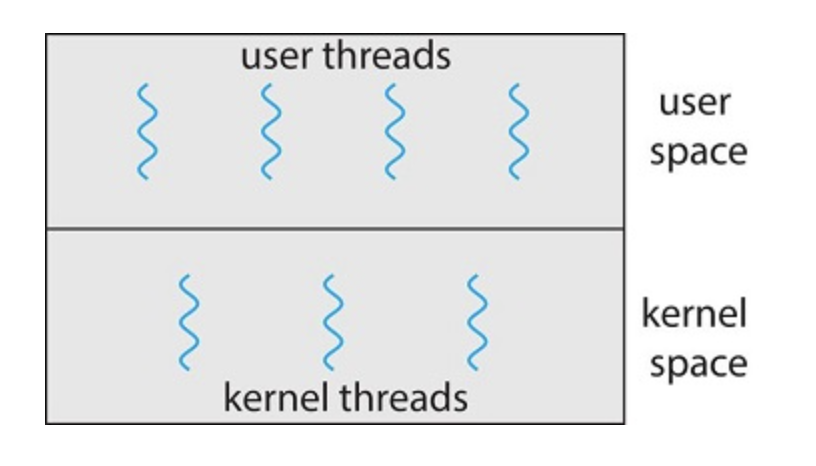

Thread support may be implemented at either the user level or the kernel level. User threads are managed without kernel support, kernal threads are supported and managed by the operating system. Almost all modern operating systems support kernel threads

User and Kernel Threads

Many-to-one Model

This maps many user level threads to one kernel level thread. It is implemented by a thread library in the user space. If a thread makes a blocking call, the whole process will be blocked. Additionally, since only one thread can access the kernel at any one time, multiple threads cannot run in parallel on multicore systems.

Many-to-one model

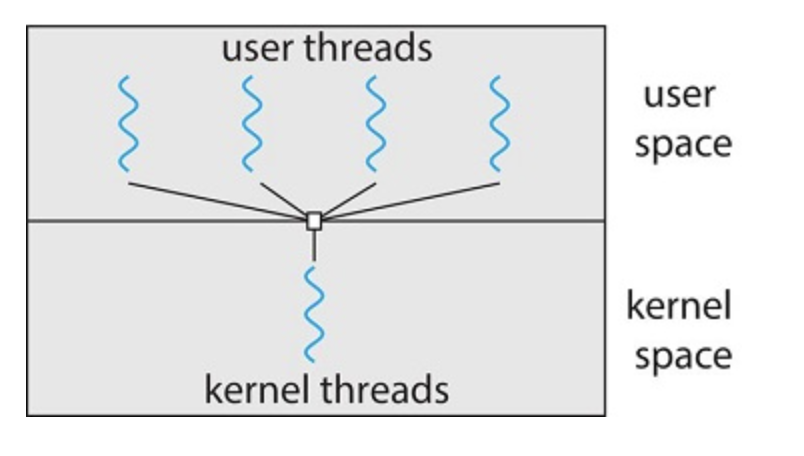

One-to-one Model

Maps each user thread onto a kernel thread

- multiple threads can run in parallel on multi-processors

- creating a user thread requires creating a corresponding kernel thread

One-to-one model

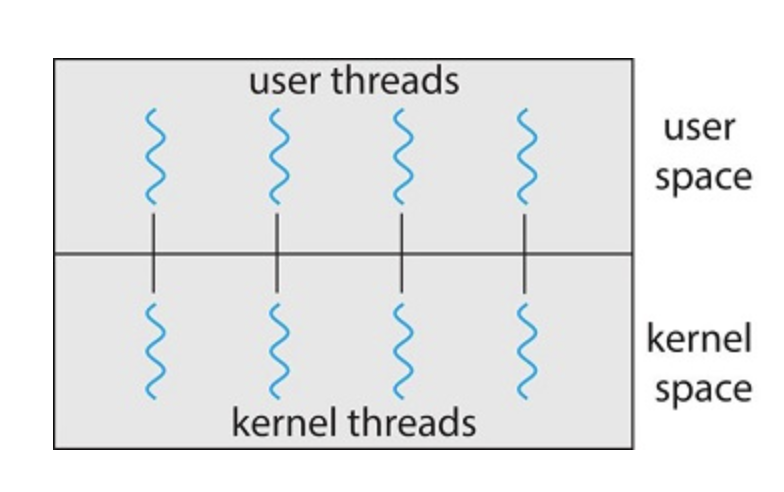

Many-to many Model

Multiplexes user threads to a smaller or equal number of kernel threads

Many-to-many model

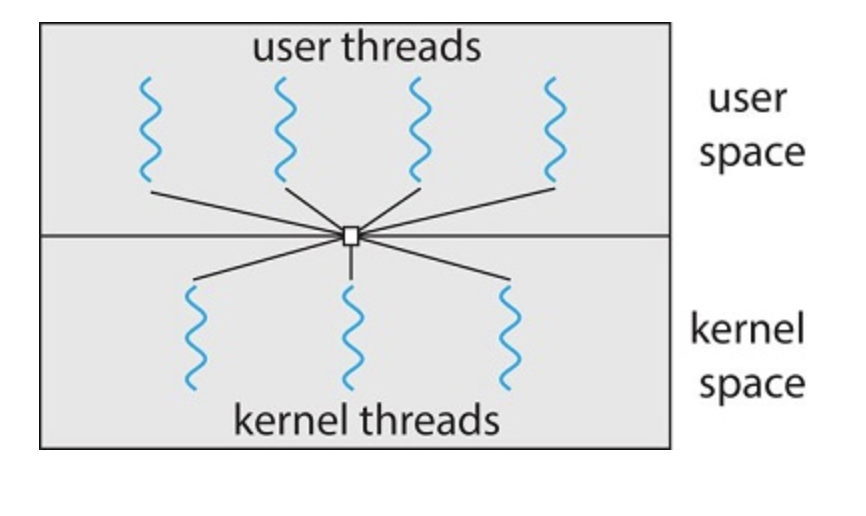

One variation of many to many is called the two-leveled model. You can bind a thread to a kernel thread while the rest can multiplex

two-level model

4.4 Thread Libraries

A thread library is an api ofr creating and managing threads

- Asynchronous threading: once a parent creates a child thread, the parent resumes its execution and runs concurrently and independently.

- Synchronous threading: the parent must wait for all of its children to terminate before it resumes

Pthreads

Refers to POSIX standard for api for thread creation and synchronization

it's a specification not an implementation

4.5 Implicit Threading

Transfers the creation of management of threading from application developers to compilers and runtime libraries

This requires application devs to identify tasks that can run in parallel

Thread Pools

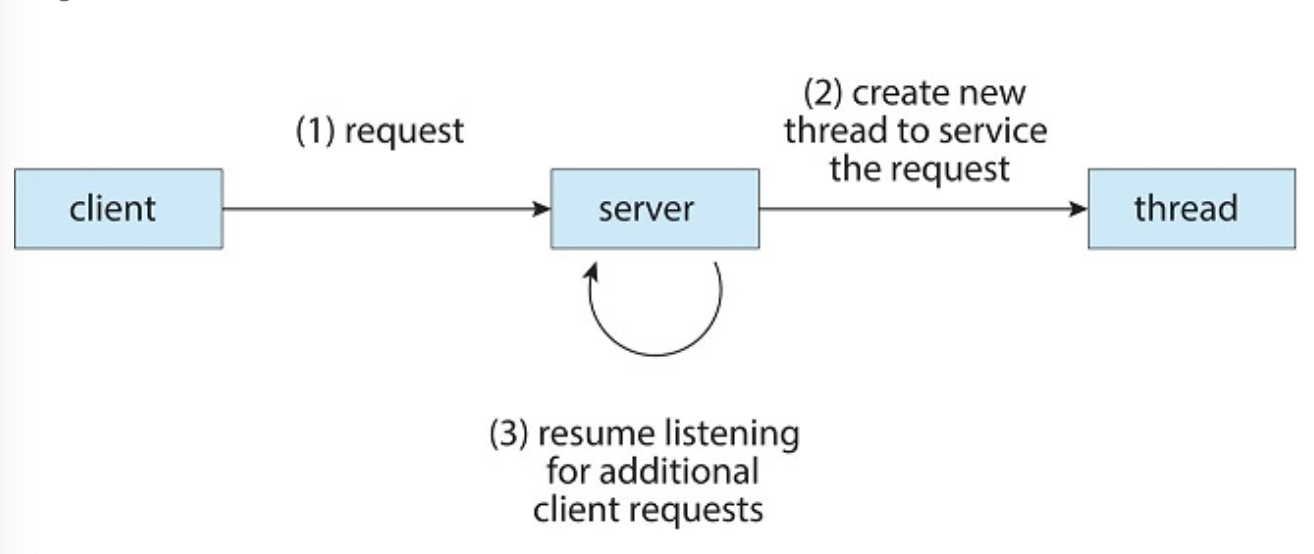

Create a number of threads at startup and place them into a pool that waits for work. The server submits the request to the thread pool. If there is an available thread, it's awakened and the request is serviced. If no available thread, the task is queued.

Thread Pool Benefits

- Servicing a request with an existing thread is often faster than waiting to create a thread.

- A thread pool limits the number of threads that exist at any one point. This is particularly important on systems that cannot support a large number of concurrent threads.

- Separating the task to be performed from the mechanics of creating the task allows us to use different strategies for running the task. For example, the task could be scheduled to execute after a time delay or to execute periodically.

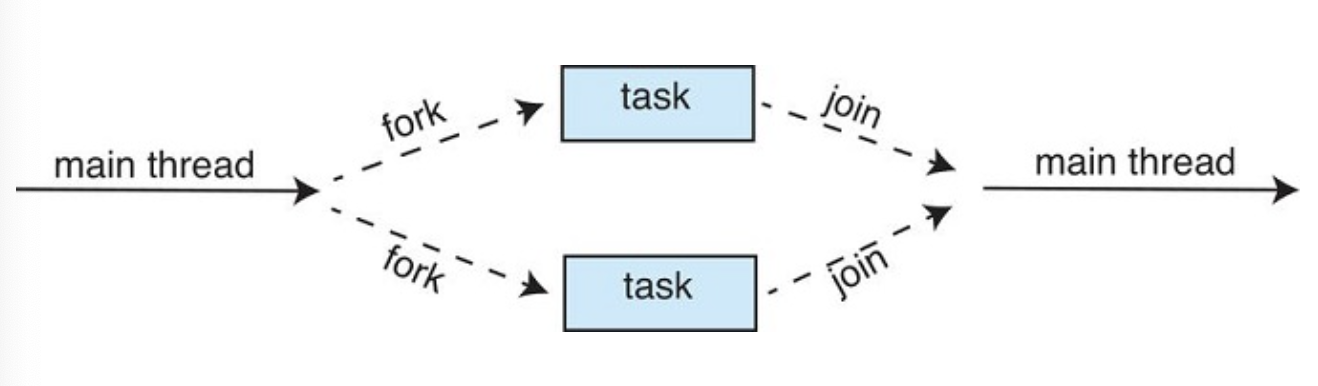

Fork Join

OpenMP

Identifies parallel regions as blocks that may run in parallel.

Grand central dispatch

schedules tasks for run-time execution by placing them in a dispatch queue, there are two queues, serial and concurrent.

4.6 Threading issues

If one thread in a program calls fork(), does the new process duplicate all threads, or is the new process single-threaded?

Some unix systems have two versions of fork. One duplicates all threads, and some duplicates only the thread invoked for the fork() system call.

Signal Handling

A signal is used to notify a process that an event has occured. It may be synchronous or asynchronous

Signals follow this pattern:

- Signal is generated by an event

- the signal is delivered to a process

- once delivered, the signal must be handled

A signal may be handled by one of two possible handlers

- a default handler

- a user defined handler

Where should the signal be delivered?

- Deliver the signal to the thread to which the signal applies.

- Deliver the signal to every thread in the process.

- Deliver the signal to certain threads in the process.

- Assign a specific thread to receive all signals for the process.

Thread Cancellation

Involves terminating a thread before it has completed

Target Thread: the thread to be cancelled

- Asynchronous Cancellation: One thread immediately terminates the target thread.

- Deferred Cancellation: The target thread periodically checks whether it should terminate, allowing it an opportunity to terminate itself in an orderly fashion.

Thread Local Storage

Each thread may need its own copy of certain data

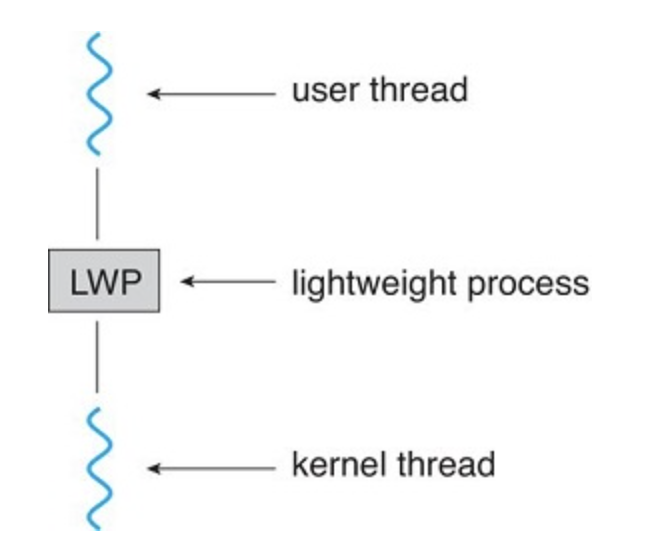

Scheduler activations

We may require communication between kernel and thread library. This communication allows kernel threads to be dynamically adjusted

Lightweight Process: an intermediate data structure between user and kernel threads

Scheduler activation: a communication scheme between user-thread library and kernel. Kernel provides an application with virtual processors and the application can schedule threads onto available virtual processors.

4.7 Operating system examples

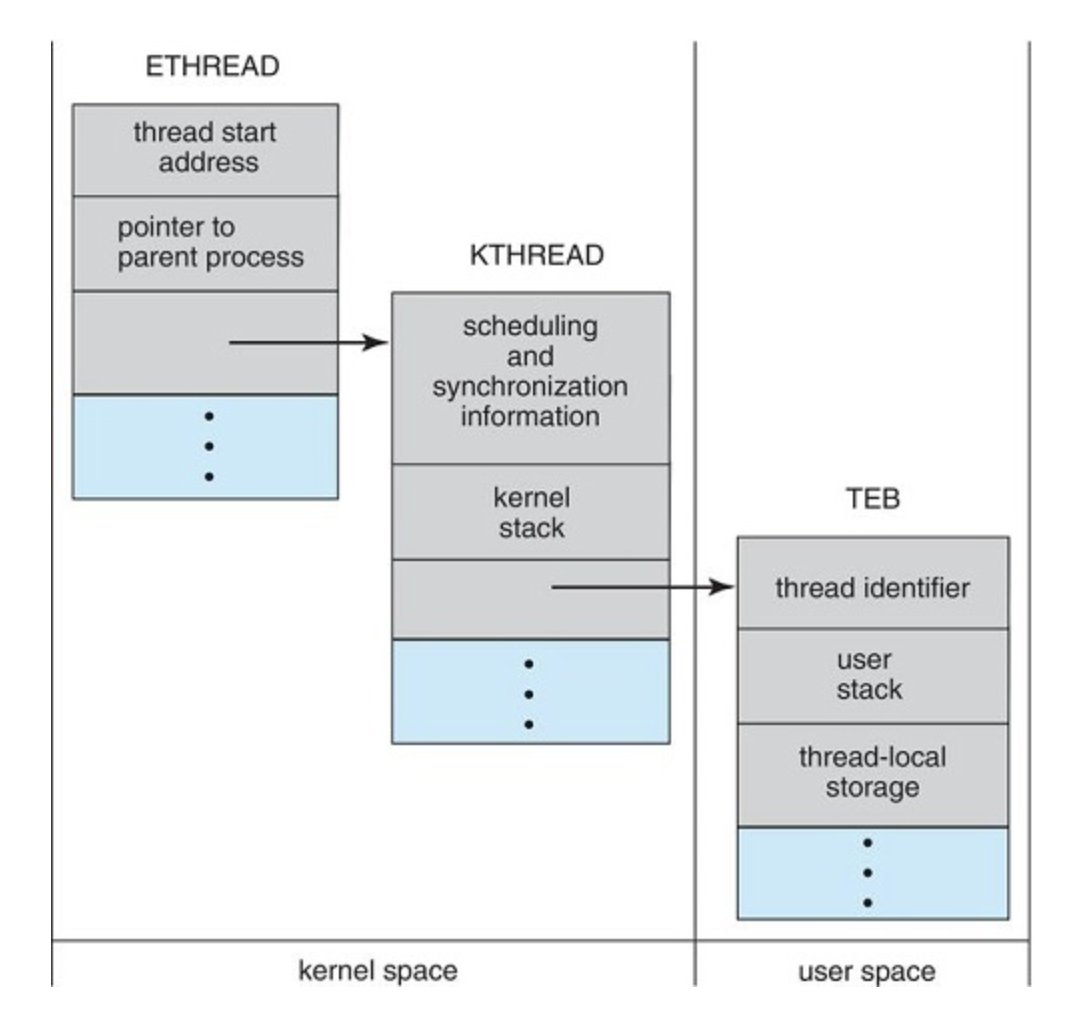

Windows thread

Data structures of a windows thread

Linux Threads

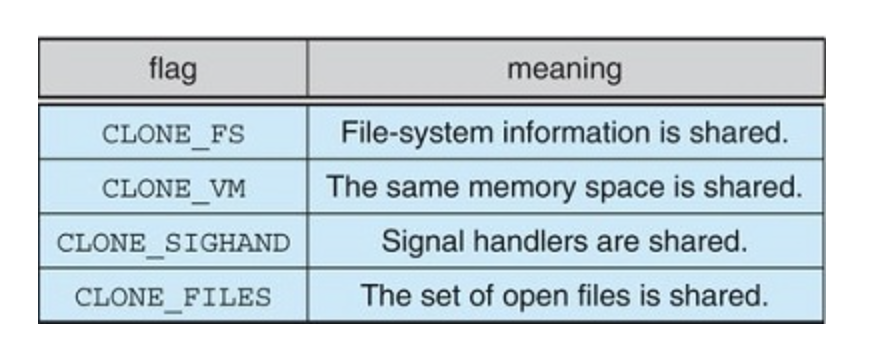

some flags passed when clone() is invoked