CSS 430 Book Notes Chapter 7 - Synchronization Examples

7.1 Classic problems of synchronization

The bounded-buffer problem

We assume that the pool consists of n buffers, each capable of holding one item. The mutex binary semaphore provides mutual exclusion for accesses to the buffer pool and is initialized to the value 1. The empty and full semaphores count the number of empty and full buffers. The semaphore empty is initialized to the value n; the semaphore full is initialized to the value 0.

Producer code

while (true) {

. . .

/* produce an item in next_produced */

. . .

wait(empty);

wait(mutex);

. . .

/* add next_produced to the buffer */

. . .

signal(mutex);

signal(full);

}

Consumer Code

while (true) {

wait(full);

wait(mutex);

. . .

/* remove an item from buffer to next_consumed */

. . .

signal(mutex);

signal(empty);

. . .

/* consume the item in next_consumed */

. . .

}

The Reader-Writers Problem

In a database system, you may have process that want to read from the database (readers), and process that may want to update the database(writers). If two readers want to access the database, no problems occur, however, if a reader and a writer want to access at the same time, there will be issues.

Therefore, we ensure that writers have exclusive access while writing to the database

First Variation

No reader is kept waiting unless a writer has already obtained permission to use the shared object. (No reader should wait for other readers to finish simply because a writer is waiting)

In this variation, the reader processes share the following data structures

semaphore rw_mutex = 1;

semaphore mutex = 1;

int read_count = 0;

while (true) {

wait(mutex);

read_count++;

if (read_count == 1)

wait(rw_mutex);

signal(mutex);

. . .

/* reading is performed */

. . .

wait(mutex);

read_count--;

if (read_count == 0)

signal(rw_mutex);

signal(mutex);

}

In this binary semaphores, mutex and rw_mutex are initialized to 1, read_count is a counting semaphore initialized to 0. The semaphore rw_mutex is common to both the reader and writer process. mutex is used to ensure mutual exclusion when the variable read_count is updated (by the readers). The read_count variable keeps track of how many processes are currently reading the object. The semaphore rw_mutex functions as a mutex semaphore for the writer processes. It is also used by the first or last reader that enters or exits the critical section, however it is not used by readers that enter or exit while the other readers are in their critical sections.

The writers share the following data structure

while (true) {

wait(rw_mutex);

. . .

/* writing is performed */

. . .

signal(rw_mutex);

}

If a writer is in the critical section and n readers are waiting, then one reader is queued on rw_mutex and mutex. When a writer executes signal(rw_mutex), we may resume the execution of either the waiting readers or a single waiting writer.

Second Variation

One a writer is ready, the writer performs its write as soon as possible. Ie, if a writer is waiting, no new readers may start reading.

Either solution may cause starvation. In the first variation, the writers may starve, in the second, readers may starve.

Reader-writer locks are most useful in the following situations:

-

In applications where it is easy to identify which processes only read shared data and which processes only write shared data.

-

In applications that have more readers than writers. This is because reader-writer locks generally require more overhead to establish than semaphores or mutual-exclusion locks. The increased concurrency of allowing multiple readers compensates for the overhead involved in setting up the reader-writer lock.

The Dining-Philosophers Problem

Consider five philosophers who spend their lives thinking and eating. The philosophers share a circular table surrounded by five chairs, each belonging to one philosopher. In the center of the table is a bowl of rice, and the table is laid with five single chopsticks (animation below). When a philosopher thinks, she does not interact with her colleagues. From time to time, a philosopher gets hungry and tries to pick up the two chopsticks that are closest to her (the chopsticks that are between her and her left and right neighbors). A philosopher may pick up only one chopstick at a time. Obviously, she cannot pick up a chopstick that is already in the hand of a neighbor. When a hungry philosopher has both her chopsticks at the same time, she eats without releasing the chopsticks. When she is finished eating, she puts down both chopsticks and starts thinking again.

The dining-philosophers problem is considered a classic synchronization problem neither because of its practical importance nor because computer scientists dislike philosophers but because it is an example of a large class of concurrency-control problems. It is a simple representation of the need to allocate several resources among several processes in a deadlock-free and starvation-free manner.

Semaphore Solution

One solution is to represent each chopstick with a semaphore. A philosopher tries to grab a chopstick by executing wait

A philosopher tries to grab a chopstick by executing wait() on that semaphore. She releases her chopstick by executing signal() on the appropriate semaphores, thus the shared data are semaphore chopstick[5] where all the elements of chopsticks are initialized to 1.

Structure of philosopher i

while (true) {

wait(chopstick[i]);

wait(chopstick[(i+1) % 5]);

. . .

/* eat for a while */

. . .

signal(chopstick[i]);

signal(chopstick[(i+1) % 5]);

. . .

/* think for awhile */

. . .

}

This solution guarantees no two neighbors are eating simultaneously, however it can create a deadlock. If all five philosophers become hungry at the same time and each grabs her left chopstick, all elements of chopstick will now be 0, each philosopher will not be able to grab a right chopstick as they are all taken.

Some remedies to the deadlock problem:

- Allow at most four philosophers to be sitting simultaneously at the table.

- Allow a philosopher to pick up her chopsticks only if both chopsticks are available (to do this, she must pick them up in a critical section).

- Use an asymmetric solution—that is, an odd-numbered philosopher picks up first her left chopstick and then her right chopstick, whereas an even-numbered philosopher picks up her right chopstick and then her left chopstick.

Monitor Solution

The monitor solution is a deadlock-free solution to the dining philosophers problem. To do this, we need three states that we may find the philosopher in. We will use the following data structure to accomplish this

enum {THINKING, HUNGRY, EATING} state[5];

Philosopher state[i]= EATING only if her two neighbors are not eating: state[(i+4)%5 != EATING) and state{(i+1) % 5] != EATING)

We also need to declare

condition self[5]

This allows philosopher i to delay when she is hungry, but is unable to obtain the chopsticks she needs

The distribution of the chopsticks is controlled by the monitor DiningPhilosophers

Each philosopher, before starting to eat, must invoke the pickup() operation. This may result in the suspension of the philosopher process. After a successful pickup operation, the philosopher may "eat". Following this process, the philosopher invokes the putdown() operation. The sequence looks as follows:

DiningPhilosophers.pickup(i);

...

eat

...

DiningPhilosophers.putdown(i);

Monitor solution to dining-philosopher problem

monitor DiningPhilosophers

{

enum {THINKING, HUNGRY, EATING} state[5];

condition self[5];

void pickup(int i) {

state[i] = HUNGRY;

test(i);

if (state[i] != EATING)

self[i].wait();

}

void putdown(int i) {

state[i] = THINKING;

test((i + 4) % 5);

test((i + 1) % 5);

}

void test(int i) {

if ((state[(i + 4) % 5] != EATING) &&

(state[i] == HUNGRY) &&

(state[(i + 1) % 5] != EATING)) {

state[i] = EATING;

self[i].signal();

}

}

initialization_code() {

for (int i = 0; i < 5; i++)

state[i] = THINKING;

}

}

This solution prevents deadlocks, however, it doesn't prevent starvation.

7.2 Synchronization within the Kernel

Synchronization in Windows

When the Windows kernel accesses a global resource on a single-processor system, it temporarily masks interrupts for all interrupt handlers that may also access the global resource. On a multiprocessor system, Windows protects access to global resources using spinlocks, although the kernel uses spinlocks only to protect short code segments.

For thread synchronization outside the kernel, Windows provides dispatcher objects. Using a dispatcher object, threads synchronize according to several different mechanisms, including mutex locks, semaphores, events, and timers.

The system protects shared data by requiring a thread to gain ownership of a mutex to access the data and to release ownership when it is finished.

Events are similar to condition variables; that is, they may notify a waiting thread when a desired condition occurs. Finally, timers are used to notify one (or more than one) thread that a specified amount of time has expired.

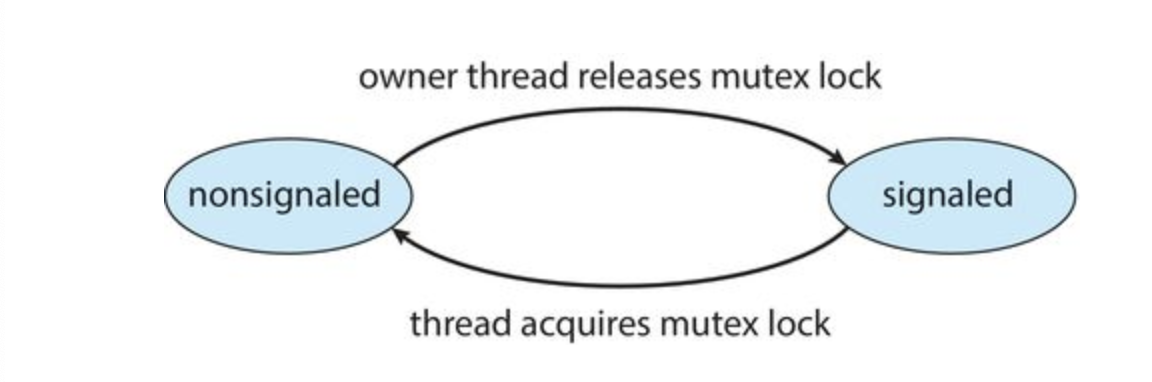

Dispatcher objects may be in either a signaled state or a nonsignaled state. An object in a signaled state is available, and a thread will not block when acquiring the object. An object in a nonsignaled state is not available, and a thread will block when attempting to acquire the object.

A critical-section object is a user-mode mutex that can often be acquired and released without kernel intervention. On a multiprocessor system, a critical-section object first uses a spinlock while waiting for the other thread to release the object. If it spins too long, the acquiring thread will then allocate a kernel mutex and yield its CPU.

Synchronization in linux

The simplest synchronization technique within the Linux kernel is an atomic integer, which is represented using the opaque data type atomic_t. As the name implies, all math operations using atomic integers are performed without interruption. To illustrate, consider a program that consists of an atomic integer counter and an integer value.

Mutex locks are available in Linux for protecting critical sections within the kernel. Here, a task must invoke the mutex_lock() function prior to entering a critical section and the mutex_unlock() function after exiting the critical section. If the mutex lock is unavailable, a task calling mutex_lock() is put into a sleep state and is awakened when the lock's owner invokes mutex_unlock().

Linux also provides spinlocks and semaphores (as well as reader-writer versions of these two locks) for locking in the kernel.

On single-processor machines, such as embedded systems with only a single processing core, spinlocks are inappropriate for use and are replaced by enabling and disabling kernel preemption. That is, on systems with a single processing core, rather than holding a spinlock, the kernel disables kernel preemption; and rather than releasing the spinlock, it enables kernel preemption. This is summarized below:

| Single Processor | Multiple Processors |

|---|---|

| Disable Kernel Preemption | Acquire Spin Lock |

| Enable Kernel Preemption | Release Spin lock |

In the Linux kernel, both spinlocks and mutex locks are nonrecursive, which means that if a thread has acquired one of these locks, it cannot acquire the same lock a second time without first releasing the lock. Otherwise, the second attempt at acquiring the lock will block.

Linux uses an interesting approach to disable and enable kernel preemption. It provides two simple system calls—preempt_disable() and preempt_enable()—for disabling and enabling kernel preemption. The kernel is not preemptible, however, if a task running in the kernel is holding a lock. To enforce this rule, each task in the system has a thread-info structure containing a counter, preempt_count, to indicate the number of locks being held by the task. When a lock is acquired, preempt_count is incremented. It is decremented when a lock is released. If the value of preempt_count for the task currently running in the kernel is greater than 0, it is not safe to preempt the kernel, as this task currently holds a lock. If the count is 0, the kernel can safely be interrupted (assuming there are no outstanding calls to preempt_disable()).

7.3 POSIX synchronization

POSIX mutex locks

Pthreads uses the pthread_mutex_t data type for mutex locks. A mutex is created with the pthread_mutex_init() function. The first parameter is a pointer to the mutex. By passing NULL as a second parameter, we initialize the mutex to its default attributes.

#include <pthread.h>

pthread_mutex_t mutex;

/* create and initialize the mutex lock */

pthread_mutex_init(&mutex,NULL);

The mutex is acquired and released with the pthread_mutex_lock() and pthread_mutex_unlock() functions. If the mutex lock is unavailable when pthread_mutex_lock() is invoked, the calling thread is blocked until the owner invokes pthread_mutex_unlock()

/* acquire the mutex lock */

pthread_mutex_lock(&mutex);

/* critical section */

/* release the mutex lock */

pthread_mutex_unlock(&mutex);

All mutex functions return a value of 0 with correct operation; if an error occurs, these functions return a nonzero error code.

POSIX Semaphores

POSIX specifies two types of semaphores—named and unnamed.

POSIX named semaphores

The function sem_open() is used to create and open a POSIX named sempahore:

#include <semaphore.h>

sem_t *sem;

/* Create the semaphore and initialize it to 1 */

sem = sem_open("SEM", O_CREAT, 0666, 1);

This creates a semaphore named SEM. The O_CREAT flag indicates that the semaphore will be created if it does not exist.

Additionally, the semaphore has read and write access for other processes (via the parameter 0666) and is initialized to 1.

The advantage of named semaphores is that multiple unrelated processes can easily use a common semaphore as a synchronization mechanism by simply referring to the semaphore's name. In the example above, once the semaphore SEM has been created, subsequent calls to sem_open() (with the same parameters) by other processes return a descriptor to the existing semaphore.

POSIX declares these operations sem_wait() and sem_post() (as opposed to wait() and signal())

Example of named semaphores with Pthreads

/* acquire the semaphore */

sem_wait(sem);

/* critical section */

/* release the semaphore */

sem_post(sem);

POSIX unnamed semaphores

An unnamed semaphore is created and initialized using the sem_init() function, which is passed three parameters:

- A pointer to the semaphore

- A flag indicating the level of sharing

- The semaphore's initial value

#include <semaphore.h>

sem_t sem;

/* Create the semaphore and initialize it to 1 */

sem_init(&sem, 0, 1);

By passing the flag 0, we are indicating that this semaphore can be shared only by threads belonging to the process that created the semaphore. We also initialize the semaphore value to 1.

POSIX unnamed semaphores use the same sem_wait() and sem_post() operations as named semaphores.

/* acquire the semaphore */

sem_wait(&sem);

/* critical section */

/* release the semaphore */

sem_post(&sem);

POSIX Condition Variables

Condition variables are used within the context of a monitor, which provides a locking mechanism to ensure data integrity. Since Pthreads is typically used in C programs—and since C does not have a monitor—we accomplish locking by associating a condition variable with a mutex lock.

Condition variables in Pthreads use the pthread_cond_t data type and are initialized using the pthread_cond_init() function.

pthread_mutex_t mutex;

pthread_cond_t cond_var;

pthread_mutex_init(&mutex,NULL);

pthread_cond_init(&cond_var,NULL);

The pthread_cond_wait() function is used for waiting on a condition variable. The following code illustrates how a thread can wait for the condition a == b to become true using a Pthread condition variable:

pthread_mutex_lock(&mutex);

while (a != b)

pthread_cond_wait(&cond_var, &mutex);

pthread_mutex_unlock(&mutex);

The mutex lock associated with the condition variable must be locked before the pthread_cond_wait() function is called, since it is used to protect the data in the conditional clause from a possible race condition.

Once this lock is acquired, the thread can check the condition. If the condition is not true, the thread then invokes pthread_cond_wait(), passing the mutex lock and the condition variable as parameters.

Calling pthread_cond_wait() releases the mutex lock, thereby allowing another thread to access the shared data and possibly update its value so that the condition clause evaluates to true.

A thread that modifies the shared data can invoke the pthread_cond_signal() function, thereby signaling one thread waiting on the condition variable.

pthread_mutex_lock(&mutex);

a = b;

pthread_cond_signal(&cond_var);

pthread_mutex_unlock(&mutex);

pthread_cond_signal() does not release the mutex lock. It is the subsequent call to pthread_mutex_unlock() that releases the mutex. Once the mutex lock is released, the signaled thread becomes the owner of the mutex lock and returns control from the call to pthread_cond_wait().

7.4 Synchronization in Java

Java monitors

Java provides a monitor-like concurrency mechanism for thread synchronization.

The below example implements a solution to the bounded-buffer problem wherein the producer and consumer invoke the insert() and remove() methods, respectively.

public class BoundedBuffer<E>

{

private static final int BUFFER_SIZE = 5;

private int count, in, out;

private E[] buffer;

public BoundedBuffer() {

count = 0;

in = 0;

out = 0;

buffer = (E[]) new Object[BUFFER_SIZE];

}

/* Producers call this method */

public synchronized void insert(E item) {

/* See Figure 7.4.3 */

}

/* Consumers call this method */

public synchronized E remove() {

/* See Figure 7.4.3 */

}

}

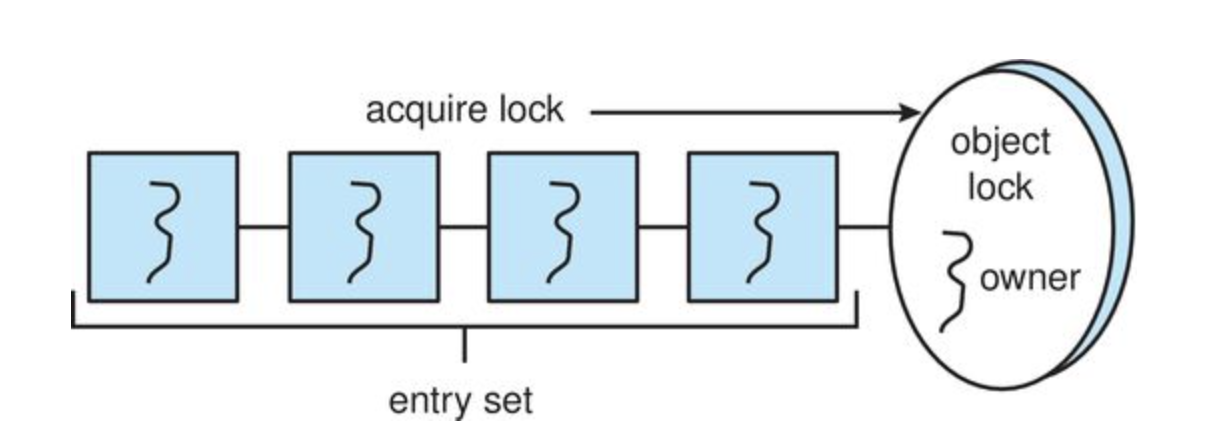

Every object in Java has associated with it a single lock. When a method is declared to be synchronized, calling the method requires owning the lock for the object. We declare a synchronized method by placing the synchronized keyword in the method definition, such as with the insert() and remove() methods in the BoundedBuffer class.

Invoking a synchronized method requires owning the lock on an object instance of BoundedBuffer.

If the lock is already owned by another thread, the thread calling the synchronized method blocks and is placed in the entry set for the object's lock.

If the lock is available when a synchronized method is called, the calling thread becomes the owner of the object's lock and can enter the method. The lock is released when the thread exits the method.

If the entry set for the lock is not empty when the lock is released, the JVM arbitrarily selects a thread from this set to be the owner of the lock

Entry set for a lock

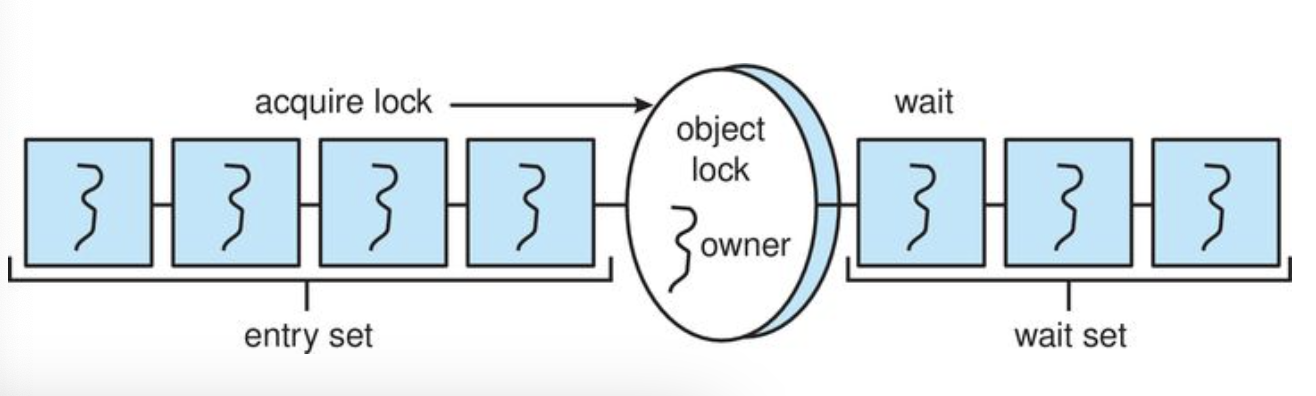

Every object also has associated with it a wait set consisting of a set of threads.

This wait set is initially empty. When a thread enters a synchronized method, it owns the lock for the object. However, this thread may determine that it is unable to continue because a certain condition has not been met.

public void someMethod() {

/* non-critical section */

synchronized(this) {

/* critical section */

}

/* remainder section */

}

When a thread calls the wait() method, the following happens:

- The thread releases the lock for the object.

- The state of the thread is set to blocked.

- The thread is placed in the wait set for the object.

Insert(), and remove() methods using wait() and notify()

/* Producers call this method */

public synchronized void insert(E item) {

while (count == BUFFER_SIZE) {

try {

wait();

}

catch (InterruptedException ie) { }

}

buffer[in] = item;

in = (in + 1) % BUFFER_SIZE;

count++;

notify();

}

/* Consumers call this method */

public synchronized E remove() {

E item;

while (count == 0) {

try {

wait();

}

catch (InterruptedException ie) { }

}

item = buffer[out];

out = (out + 1) % BUFFER_SIZE;

count--;

notify();

return item;

}

Ordinarily, when a thread exits a synchronized method, the departing thread releases only the lock associated with the object, possibly removing a thread from the entry set and giving it ownership of the lock. However, at the end of the insert() and remove() methods, we have a call to the method notify(). The call to notify():

- Picks an arbitrary thread

Tfrom the list of threads in the wait set - Moves

Tfrom the wait set to the entry set - Sets the state of

Tfrom blocked to runnable

T is now eligible to compete for the lock with the other threads. Once T has regained control of the lock, it returns from calling wait(), where it may check the value of count again.

-

The producer calls the

insert()method, sees that the lock is available, and enters the method. Once in the method, the producer determines that the buffer is full and callswait(). The call towait()releases the lock for the object, sets the state of the producer to blocked, and puts the producer in the wait set for the object. -

The consumer ultimately calls and enters the

remove()method, as the lock for the object is now available. The consumer removes an item from the buffer and callsnotify(). Note that the consumer still owns the lock for the object. -

The call to

notify()removes the producer from the wait set for the object, moves the producer to the entry set, and sets the producer's state to runnable. -

The consumer exits the

remove()method. Exiting this method releases the lock for the object. -

The producer tries to reacquire the lock and is successful. It resumes execution from the call to

wait(). The producer tests thewhileloop, determines that room is available in the buffer, and proceeds with the remainder of theinsert()method. If no thread is in the wait set for the object, the call tonotify()is ignored. When the producer exits the method, it releases the lock for the object.

Reentrant Locks

a ReentrantLock acts like the synchronized statement described in Section Java monitors: a ReentrantLock is owned by a single thread and is used to provide mutually exclusive access to a shared resource. However, the ReentrantLock provides several additional features, such as setting a fairness parameter, which favors granting the lock to the longest-waiting thread.

A thread acquires a ReentrantLock lock by invoking its lock() method. If the lock is available—or if the thread invoking lock() already owns it, which is why it is termed reentrant—lock() assigns the invoking thread lock ownership and returns control. If the lock is unavailable, the invoking thread blocks until it is ultimately assigned the lock when its owner invokes unlock(). ReentrantLock implements the Lock interface

Lock key = new ReentrantLock();

key.lock();

try {

/* critical section */

}

finally {

key.unlock();

}

If the lock is acquired via the lock() method, it is important that the lock be similarly released. By enclosing unlock() in a finally clause, we ensure that the lock is released once the critical section completes or if an exception occurs within the try block.

Semaphores

Semaphore sem = new Semaphore(1);

try {

sem.acquire();

/* critical section */

}

catch (InterruptedException ie) { }

finally {

sem.release();

}

Condition variables

We illustrate with the following example: Suppose we have five threads, numbered 0 through 4, and a shared variable turn indicating which thread's turn it is. When a thread wishes to do work, it calls the doWork() method in the following code, passing its thread number. Only the thread whose value of threadNumber matches the value of turn can proceed; other threads must wait their turn.

/* threadNumber is the thread that wishes to do some work */

public void doWork(int threadNumber)

{

lock.lock();

try {

/**

* If it's not my turn, then wait

* until I'm signaled.

*/

if (threadNumber != turn)

condVars[threadNumber].await();

/**

* Do some work for awhile ...

*/

/**

* Now signal to the next thread.

*/

turn = (turn + 1) % 5;

condVars[turn].signal();

}

catch (InterruptedException ie) { }

finally {

lock.unlock();

}

}

We also must create a ReentrantLock and five condition variables (representing the conditions the threads are waiting for) to signal the thread whose turn is next. This is shown below:

Lock lock = new ReentrantLock();

Condition[] condVars = new Condition[5];

for (int i = 0; i < 5; i++)

condVars[i] = lock.newCondition();

7.5 Alternative approaches

Transactional Memory

A memory transaction is a sequence of memory read-write operations that are atomic. If all operations in a transaction are completed, the memory transaction is committed. Otherwise, the operations must be aborted and rolled back.

As an alternative to traditional locking methods, new features that take advantage of transactional memory can be added to a programming language. In our example, suppose we add the construct atomic{S}, which ensures that the operations in S execute as a transaction. This allows us to rewrite the update() function as follows:

void update ()

{

atomic {

/* modify shared data */

}

}

The advantage of using such a mechanism rather than locks is that the transactional memory system—not the developer—is responsible for guaranteeing atomicity.

Additionally, because no locks are involved, deadlock is not possible.

OpenMP

Any code following the compiler directive #pragma omp parallel is identified as a parallel region and is performed by a number of threads equal to the number of processing cores in the system.

Along with its #pragma omp parallel compiler directive, OpenMP provides the compiler directive #pragma omp critical, which specifies the code region following the directive as a critical section in which only one thread may be active at a time. In this way, OpenMP provides support for ensuring that threads do not generate race conditions.

void update(int value)

{

#pragma omp critical

{

counter += value;

}

}