CSS 430 Book Notes Chapter 8 Deadlocks

8.1 System Model

When a waiting thread can never change state because a resource it requested is held by other waiting threads, the situation is called a deadlock.

deadlock: The state in which two processes or threads are stuck waiting for an event that can only be caused by one of the processes or threads.

A system consists of a finite number of resources to be distributed among a number of competing threads.

The resources may be partitioned into several types, for instance, if a system has four CPUs, then the resource type CPU has four instances.

If a thread requests an instance of a resource type, the allocation of any instance of the type should satisfy the request. If it does not, then the instances are not identical, and the resource type classes have not been defined properly.

A thread must request a resource before using it and must release the resource after using it. A thread may request as many resources as it requires to carry out its designated task. Obviously, the number of resources requested may not exceed the total number of resources available in the system. In other words, a thread cannot request two network interfaces if the system has only one.

Under the normal mode of operation, a thread may utilize a resource in only the following sequence:

- Request. The thread requests the resource. If the request cannot be granted immediately (for example, if a mutex lock is currently held by another thread), then the requesting thread must wait until it can acquire the resource.

- Use. The thread can operate on the resource (for example, if the resource is a mutex lock, the thread can access its critical section).

- Release. The thread releases the resource.

Developers of multithreaded applications must remain aware of the possibility of deadlocks. The locking tools presented in the chapter Synchronization Tools are designed to avoid race conditions. However, in using these tools, developers must pay careful attention to how locks are acquired and released. Otherwise, deadlock can occur, as described next.

8.2 Deadlock in multithreaded applications

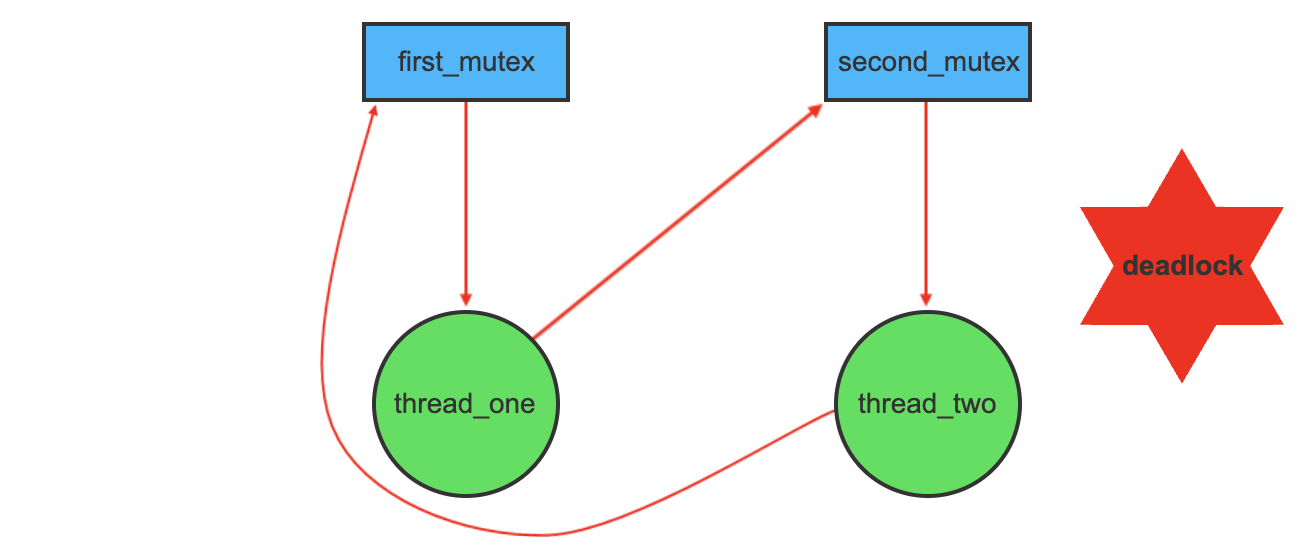

Here we illustrate an example of deadlock using Pthreads

Two mutex locks are initialized

pthread_mutex_t first_mutex;

pthread_mutex_t second_mutex;

pthread_mutex_init(&first_mutex,NULL);

pthread_mutex_init(&second_mutex,NULL);

Next, two threads, thread_one and thread_two are created and both these have access to both mutex locks. thred_one and thread_two run in the functions do_work_one() and do_work_two()

/* thread_one runs in this function */

void *do_work_one(void *param)

{

pthread_mutex_lock(&first_mutex);

pthread_mutex_lock(&second_mutex);

/**

* Do some work

*/

pthread_mutex_unlock(&second_mutex);

pthread_mutex_unlock(&first_mutex);

pthread_exit(0);

}

/* thread_two runs in this function */

void *do_work_two(void *param)

{

pthread_mutex_lock(&second_mutex);

pthread_mutex_lock(&first_mutex);

/**

* Do some work

*/

pthread_mutex_unlock(&first_mutex);

pthread_mutex_unlock(&second_mutex);

pthread_exit(0);

}

Here, thread_one attempts to acquire first_mutex and then second_mutex while thread_two attempts to acquire second_mutex then first_mutex. If thread_one acquires first_mutex and thread_two acquires second mutex, deadlock can occur

Livelock

livelock is similar to deadlock in that it prevents two or more threads from proceeding. Livelock occurs when a thread continuously attempts an action that fails. (think people trying to pass where they keep moving to the same side.)

/* thread_one runs in this function */

void *do_work_one(void *param)

{

int done = 0;

while (!done) {

pthread_mutex_lock(&first_mutex);

if (pthread_mutex_trylock(&second_mutex)) {

/**

* Do some work

*/

pthread_mutex_unlock(&second_mutex);

pthread_mutex_unlock(&first_mutex);

done = 1;

}

else

pthread_mutex_unlock(&first_mutex);

}

pthread_exit(0);

}

/* thread_two runs in this function */

void *do_work_two(void *param)

{

int done = 0;

while (!done) {

pthread_mutex_lock(&second_mutex);

if (pthread_mutex_trylock(&first_mutex)) {

/**

* Do some work

*/

pthread_mutex_unlock(&first_mutex);

pthread_mutex_unlock(&second_mutex);

done = 1;

}

else

pthread_mutex_unlock(&second_mutex);

}

pthread_exit(0);

}

8.3 Deadlock Characterization

Necessary Conditions

A deadlock situation can arise if the following four conditions hold simultaneously in a system:

- Mutual Exclusion: At least one resource must be held in a nonsharable mode; that is, only one thread at a time can use the resource. If another thread requests that resource, the requesting thread must be delayed until the resource has been released.

- Hold and Wait: A thread must be holding at least one resource and waiting to acquire additional resources that are currently being held by other threads.

- No preemption: Resources cannot be preempted. IE, a resource can be released only voluntarily by the thread holding it after the thread completes its task

- Circular Wait: A set {

, ,... } of waiting threads must exist such that is waiting for a resource held by , is waiting for a resource held by , …, is waiting for a resource held by , and is waiting for a resource held by .

In order for dead lock to occur, all four of the above must be true.

Resource Allocation Graph

Deadlocks can be described more precisely in terms of a directed graph called a system resource-allocation graph.

This graph consists of a set of

A directed edge from thread

Generally we represent each thread

Since resource

- thread_one acquires first_mutex.

- thread_two acquires second_mutex.

- thread_one wants second_mutex, which could be freed up by thread_two.

- thread_two wants first_mutex, and as it is still holding second_mutex which thread_one is waiting for, there is a cycle in the graph: first_mutex → T1 → second_mutex → T2 → first_mutex.

When thread

Once a thread finishes with a resource, the assignment edge is deleted.

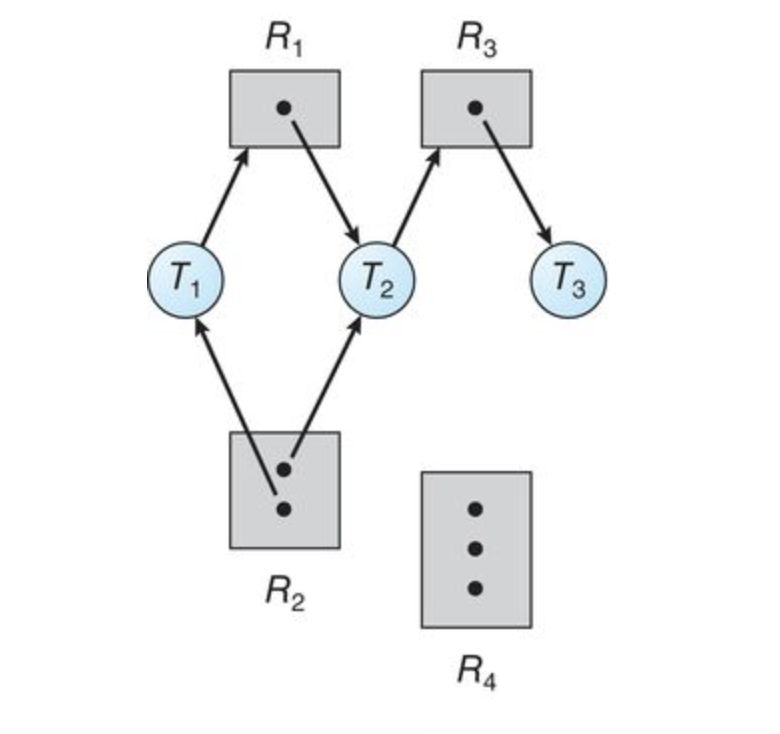

Example

The sets

Resource Instances:

- One instance of resource type

- Two instances of resource type

- One instance of resource type

- Three instances of resource type

Thread States:

- Thread

is holding an instance of resource type and is waiting for an instance of resource type . - Thread

is holding an instance of and an instance of and is waiting for an instance of . - Thread

is holding an instance of

if the graph contains no cycles, then no thread in the system is deadlocked. If the graph does contain a cycle, then a deadlock may exist.

If each resource type has exactly one instance, then a cycle implies that a deadlock has occurred. If the cycle involves only a set of resource types, each of which has only a single instance, then a deadlock has occurred. Each thread involved in the cycle is deadlocked. In this case, a cycle in the graph is both a necessary and a sufficient condition for the existence of deadlock.

If we take the above graph, and add an instance where

- T1 → R1 → T2 → R3 → T3 → R2 → T1

- T2 → R3 → T3 → R2 → T2

Threads

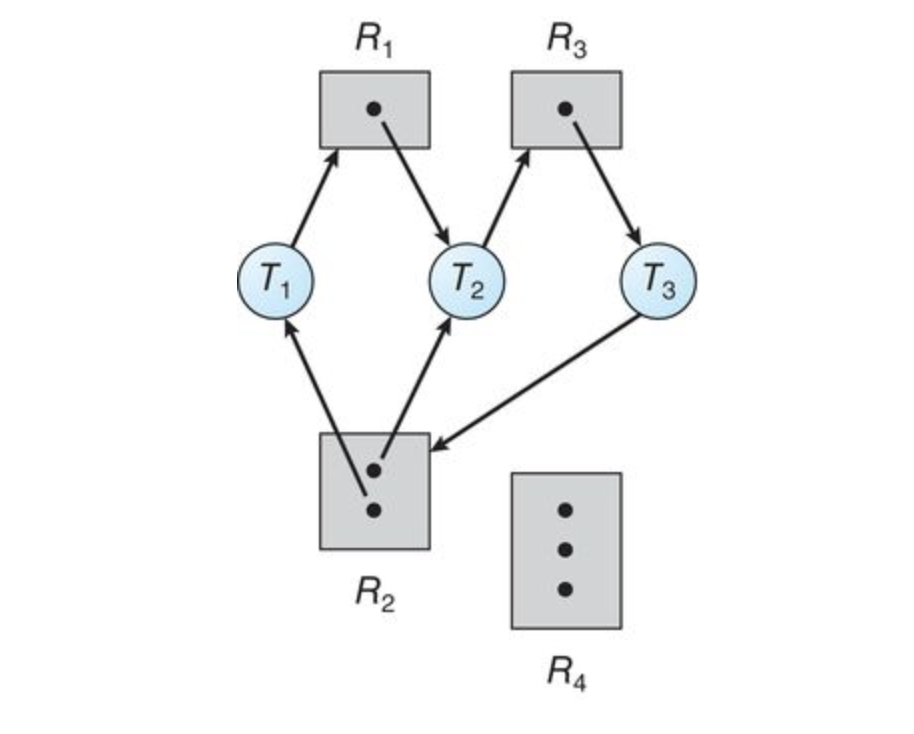

In the following however, there is no deadlock. There is a cycle, however

Methods for handling deadlocks

We can deal with deadlocks in one of three ways:

- We can ignore the problem altogether and pretend that deadlocks never occur in the system.

- We can use a protocol to prevent or avoid deadlocks, ensuring that the system will never enter a deadlocked state.

- We can allow the system to enter a deadlocked state, detect it, and recover.

Most operating systems (including Windows and Linux) use option 1.

Deadlock prevention provides a set of methods to ensure that at least one of the necessary conditions (Section Necessary conditions) cannot hold. These methods prevent deadlocks by constraining how requests for resources can be made.

Deadlock avoidance requires that the operating system be given additional information in advance concerning which resources a thread will request and use during its lifetime. With this additional knowledge, the operating system can decide for each request whether or not the thread should wait.

8.5 Deadlock Prevention

If we prevent one of the four necessary conditions for deadlocks(mutual exclusion, hold and wait, no preemption, and circular wait), we can prevent deadlock from occurring.

Mutual exclusion

In general, we cannot prevent deadlocks by denying the mutual-exclusion condition, because some resources are intrinsically nonsharable. For example, a mutex lock cannot be simultaneously shared by several threads.

Hold and Wait

To ensure that the hold-and-wait condition never occurs in the system, we must guarantee that, whenever a thread requests a resource, it does not hold any other resources.

One way we can do this is require that each thread be allocated all its resources before it begins execution, however this is difficult to implement

Another method is allowing a thread to request resources only when it has none. A thread can request some resources, uses them, but must release them before it requests more.

Both these protocols have two main disadvantages. First, resource utilization may be low, since resources may be allocated but unused for a long period. For example, a thread may be allocated a mutex lock for its entire execution, yet only require it for a short duration. Second, starvation is possible. A thread that needs several popular resources may have to wait indefinitely, because at least one of the resources that it needs is always allocated to some other thread.

No preemption

If a thread is holding some resources and requests another resource that cannot be immediately allocated to it (that is, the thread must wait), then all resources the thread is currently holding are preempted. In other words, these resources are implicitly released. The preempted resources are added to the list of resources for which the thread is waiting. The thread will be restarted only when it can regain its old resources, as well as the new ones that it is requesting.

Alternatively, if a thread requests some resources, we first check whether they are available. If they are, we allocate them. If they are not, we check whether they are allocated to some other thread that is waiting for additional resources. If so, we preempt the desired resources from the waiting thread and allocate them to the requesting thread. If the resources are neither available nor held by a waiting thread, the requesting thread must wait. While it is waiting, some of its resources may be preempted, but only if another thread requests them. A thread can be restarted only when it is allocated the new resources it is requesting and recovers any resources that were preempted while it was waiting.

Circular Wait

Consider the set

We can accomplish this scheme in an application program by developing an ordering among all synchronization objects in the system. For example, the lock ordering in the Pthread program shown below could be:

F(first_mutex)=1;

F(second_mutex)=1;

We now can use the following protocol to prevent a deadlock. Each thread can request resources only in an increasing order of enumeration. Or, a thread can initially request an instance of a resource, say

Using the function defined above, a thread which wants to use both first_mutex and second_mutex at the same time must first request first_mutex and then second_mutex.

We can also require thread requesting an instance of

If these two protocols are used, circular-wait cannot hold. This can be demonstrated by assuming that a circular wait exists. If the set of threads involved in the circular wait are

It is also important to note that imposing a lock ordering does not guarantee deadlock prevention if locks can be acquired dynamically. For example, assume we have a function that transfers funds between two accounts. To prevent a race condition, each account has an associated mutex lock that is obtained from a get_lock() function such as that shown below. Deadlock is possible if two threads simultaneously invoke the transaction() function, transposing different accounts. That is, one thread might invoke

Deadlock with lock ordering

void transaction(Account from, Account to, double amount)

{

mutex lock1, lock2;

lock1 = get_lock(from);

lock2 = get_lock(to);

acquire(lock1);

acquire(lock2);

withdraw(from, amount);

deposit(to, amount);

release(lock2);

release(lock1);

}

8.6 Deadlock Avoidance

An alternative method for preventing deadlocks is to require additional info about how resources are to be requested. For example, in a system with resources

With this knowledge of the complete sequence of requests and releases for each thread, the system can decide for each request whether or not the thread should wait in order to avoid a possible future deadlock. Each request requires that in making this decision the system consider the resources currently available, the resources currently allocated to each thread, and the future requests and releases of each thread.

The simplest and most useful model requires that each thread declare the maximum number of resources of each type that it may need. Given this, it's possible to create an algorithm that ensures that the system will never enter a deadlocked state.

Deadlock avoidance algorithms dynamically examines the resource allocation state to ensure that a circular-wait condition can never exist. The resource allocation state is defined by the number of available and allocated resources and the maximum demands of the threads

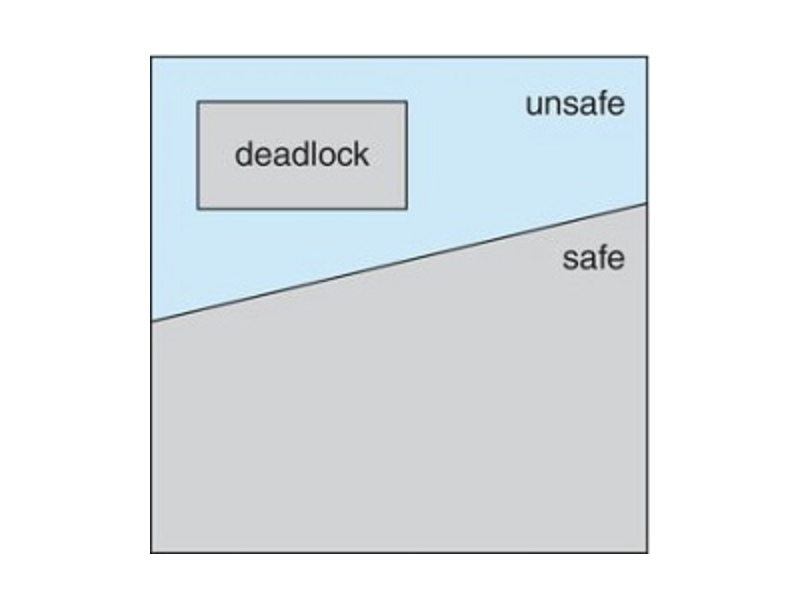

Safe State

A state is safe if the system can allocate resources to each thread (up to its maximum) in some order and still avoid a deadlock.

A system is in a safe state only if there exists a safe sequence. A sequence such as

An unsafe state may lead to a deadlock, but doesn't necessarily do so.

Safe, Unsafe, and Deadlocked State Spaces

For example, lets say you have 12 resources and three threads,

| Maximum Needs | Current Needs | |

|---|---|---|

| 10 | 5 | |

| 4 | 2 | |

| 9 | 2 |

at time

A system can go from safe to unsafe pretty easily. For instance, if at time

Additionally, If thread

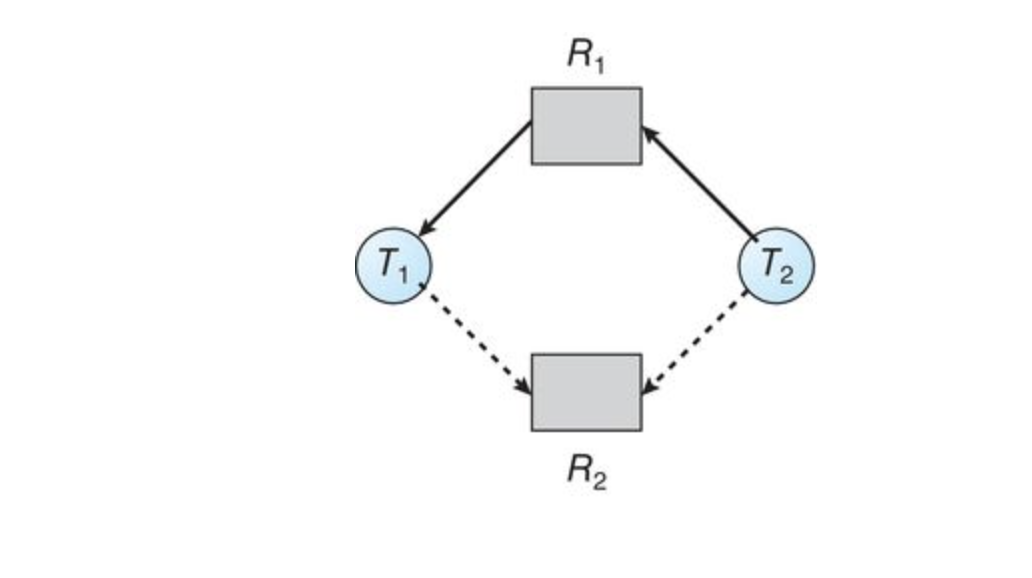

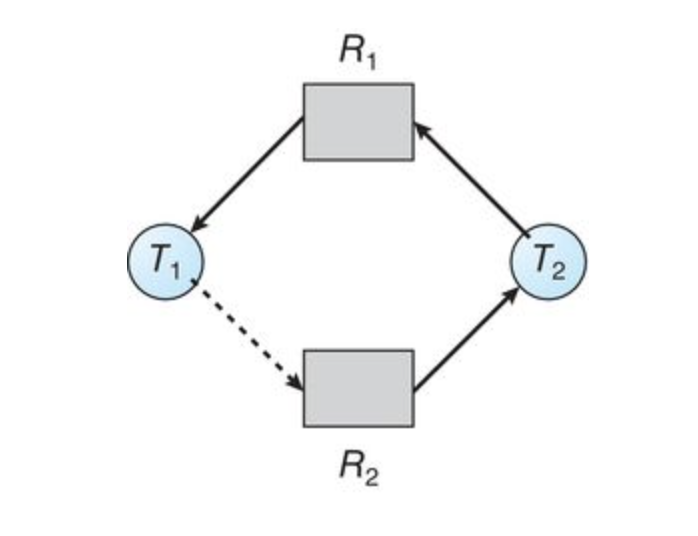

Resource-allocation-graph-algorithm.

If we have a resource-allocation system with only one instance of each resource type, we can use a variant of the resource-allocation graph for deadlock avoidance. In addition to the request and assignment edges already described, we can introduce a new type of edge, called a claim edge. A claim edge

The resources must be claimed a priori in the system, or before thread

Suppose that thread

If no cycle exists, the allocation of resources will leave the system in a safe state. If a cycle is found, the allocation will put the system in an unsafe state. in that case, thread

Example:

Suppose that

An unsafe state in the resource-allocation graph

Banker's Algorithm

The resource-allocation-graph algorithm is not applicable to a resource-allocation system with multiple instances of each resource type. The deadlock-avoidance algorithm that we describe next is applicable to such a system but is less efficient than the resource-allocation graph scheme. This algorithm is commonly known as the banker's algorithm.

When a new thread enters the system, it must declare the maximum number of instances of each resource type that it may need. This number may not exceed the total number of resources in the system. When a user requests a set of resources, the system must determine whether the allocation of these resources will leave the system in a safe state. If it will, the resources are allocated; otherwise, the thread must wait until some other thread releases enough resources.

To implement the banker's algorithm, we need to use several data structures. These data structures encode the state of the resource allocation system. In the following data structures,

- Available: A vector of length

indicates the number of available resources of each type. if Available[ ] equals , instances of resource type are available. - Max: A

matrix defines the maximum demand of each thread. If Max[ ][j] equals , thread may request at most instances of resource type - Allocation: An

matrix defines the number of resources of each type currently allocated to each thread. If Allocation[ ][j] equals , thread is currently allocated instances of resource type - Need: An

matrix indicates the remaining resources need of each thread. if Need[ ][j] equals , then thread may need more instances of resource type to complete it's task. Note that Need[ ][j] = Max[ ][j] - Allocation[ ][j]

To simplify the presentation of the banker's algorithm, we can establish some notation. Let

We can treat each row in the matrices Allocation and Need as vextors and refer to them as

Safety Algorithm

We can now present the algorithm for finding out whether or not a system is in a safe state. This algorithm can be described as follows:

-

Let Work and Finish be vectors of length

and , respectively. Initialize Work = Available and Finish[ ] = false for = 0, 1, …, − 1. -

Find an index

such that both -

Finish[

] == false -

≤ Work

If no suchexists, go to step 4.

-

-

Work = Work + Allocationi Finish[

] = true Go to step 2. -

If Finish[

] == true for all , then the system is in a safe state.

This algorithm may require an order of

Resource Request Algorithm

Next, we describe the algorithm for determining whether requests can be safely granted. Let

- If

go to step 2. Otherwise, raise an error condition since the thread has exceeded its maximum claim. - If

go to step 3, Otherwise must wait since the resources are not available. - Have the system pretend to have allocates the requested resources to thread

by modifying the state as follows

Available = Available-Requesti

Allocationi = Allocationi + Requesti

Need = Needi-Requesti

If the resulting resource allocation state is safe, the transaction is completed and the thread

Example:

To illustrate the use of the banker's algorithm, consider a system with five threads,

| Allocation | Max | Available | |

|---|---|---|---|

| ABC | ABC | ABC | |

| 010 | 753 | 332 | |

| 200 | 322 | ||

| 302 | 902 | ||

| 211 | 222 | ||

| 002 | 433 |

The content of the matrix Need is defined to be Max-Allocationand is as follows:

| Need | |

|---|---|

| ABC | |

| 743 | |

| 122 | |

| 600 | |

| 011 | |

| 431 |

We claim that the system is currently in a safe state. Indeed, the sequence

| Allocation | Need | Available | |

|---|---|---|---|

| ABC | ABC | ABC | |

| 010 | 743 | 230 | |

| 200 | 020 | ||

| 302 | 600 | ||

| 211 | 011 | ||

| 002 | 431 |

We must determine whether this new system state is safe. To do so, we execute our safety algorithm and find that the sequence

However, we can see, that when the system is in this state, a request for (3,3,0) by

8.7 Deadlock Detection

If a system does not employ either a deadlock-prevention or deadlock avoidance algorithm, then a deadlock situation may occur. To solve for this, the operating system may provide:

- An algorithm that examines the state of the system to determine whether a deadlock has occurred

- An algorithm to recover from the deadlock

Single instance of each resource type

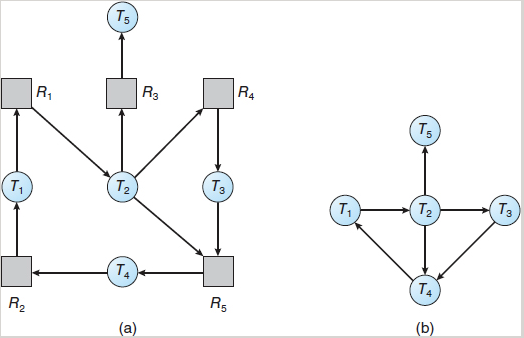

If all resources have only a single instance, we can define a deadlock detection algorithm that uses a variant of the resource allocation graph called a wait-for graph. We obtain this graph from the resource allocation graph by removing the resource nodes and collapsing the appropriate edges.

An edge from

Resource allocation graph(a) and corresponding wait-for graph(b)

A deadlock exists in the system if and only if the wait-for graph contains a cycle. To detect deadlocks, the system needs to maintain the wait-for graph and periodically invoke an algorithm that searches for a cycle in the graph. this is an

Several instances of a resource type

The wait-for graph scheme is not applicable to a resource-allocation system with multiple instances of each resource type, however we can use an algorithm similar to the banker's algorithm to deal with this

-

Available. A vector of length

indicates the number of available resources of each type. -

Allocation. An

matrix defines the number of resources of each type currently allocated to each thread. -

Request. An

matrix indicates the current request of each thread. If Request[j][ j] equals k, then thread is requesting more instances of resource type .

We treat the rows in the matrices Allocation and Request as vectors and refer to them asand . The detection algorithm investigates every possible allocation sequence for the threads that remain to be completed.

- Let Work and Finish be vectors of length

and respectively. Initialize . For if , otherwise - Find an index

such that both

if no suchexists, go to step 4

go to step 2 - If

for some , then the system is in a deadlocked state. If , then thread is deadlocked.

This algorithm requires

Recovery From Deadlock

There are two options for breaking a deadlock. One is simply to abort one or more threads to break the circular wait. The other is to preempt some resources from one or more of the deadlocked threads.

Process and thread termination

Elimination by abortion can use one of two methods. In both methods, the system reclaims all resources allocated to the terminated processes.

-

Abort all deadlocked processes. This method clearly will break the deadlock cycle, but at great expense. The deadlocked processes may have computed for a long time, and the results of these partial computations must be discarded and probably will have to be recomputed later.

-

Abort one process at a time until the deadlock cycle is eliminated. This method incurs considerable overhead, since after each process is aborted, a deadlock-detection algorithm must be invoked to determine whether any processes are still deadlocked.

Aborting a process may have repercussions. If the process was, say, updating a file, it may leave the file in an incorrect state. Additionally, if a process was holding a mutex, the system must restore the status of the mutex as being available.

If aborting one process at a time, the system must determine which process or processes should be terminated. Ideally, the system should abort the process whose termination will incur the minimum cost, unfortunatley many factors play into what may constitute minimum cost.

- What the priority of the process is

- How long the process has computed and how much longer the process will compute before completing its designated task

- How many and what types of resources the process has used (for example, whether the resources are simple to preempt)

- How many more resources the process needs in order to complete

- How many processes will need to be terminated

Resource Preemption

To eliminate deadlocks using resource preemption, we successively preempt some resources from processes and give these resources to other processes until the deadlock cycle is broken.

If preemption is required to deal with deadlocks, then three issues need to be addressed:

- Selecting a victim. Which resources and which processes are to be preempted? As in process termination, we must determine the order of preemption to minimize cost. Cost factors may include such parameters as the number of resources a deadlocked process is holding and the amount of time the process has thus far consumed.

- Rollback.

If we preempt a resource from a process, what should be done with that process? Clearly, it cannot continue with its normal execution; it is missing some needed resource. We must roll back the process to some safe state and restart it from that state.

Since, in general, it is difficult to determine what a safe state is, the simplest solution is a total rollback: abort the process and then restart it. Although it is more effective to roll back the process only as far as necessary to break the deadlock, this method requires the system to keep more information about the state of all running processes. - Starvation.

How do we ensure that starvation will not occur? That is, how can we guarantee that resources will not always be preempted from the same process?

In a system where victim selection is based primarily on cost factors, it may happen that the same process is always picked as a victim. As a result, this process never completes its designated task, a starvation situation any practical system must address. Clearly, we must ensure that a process can be picked as a victim only a (small) finite number of times. The most common solution is to include the number of rollbacks in the cost factor.