CSS 430 Book Notes Chapter 9 Main Memory

9.1 Background

The main purpose of a computer system is to execute programs. These programs, together with the data they access, must be at least partially in main memory during execution.

Modern computer systems maintain several processes in memory during system execution. Many memory-management schemes exist, reflecting various approaches, and the effectiveness of each algorithm varies with the situation. Selection of a memory-management scheme for a system depends on many factors, especially on the system's hardware design. Most algorithms require some form of hardware support.

Background

Memory is central to the operation of a computer system. Memory consists of a large array of bytes, each with it's own address. The CPU fetches instructions from memory according to the value of the program counter. These instructions may cause other items to be loaded from or stored to memory.

Basic Hardware

Main memory and the registers built into each processing core are the only general purpose storage that a CPU can access directly.

Registers built into the CPU core are generally accessible in one cycle of the CPU clock. Main Memory however, is accessed via a transaction on the memory bus. Accessing this may take many CPU clock cycles. In these cases, the CPU often needs to stall since it cannot complete the instruction it is executing. To remedy this, the CPU will often place items in it's cache for faster access.

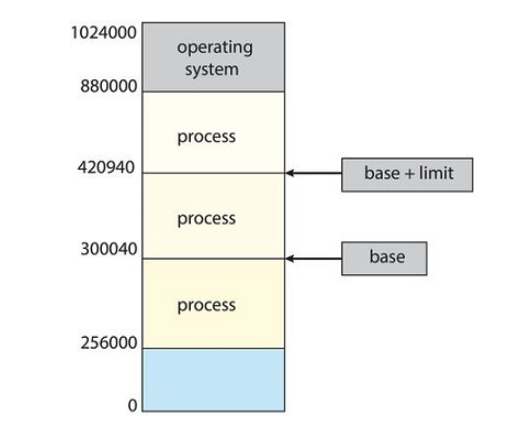

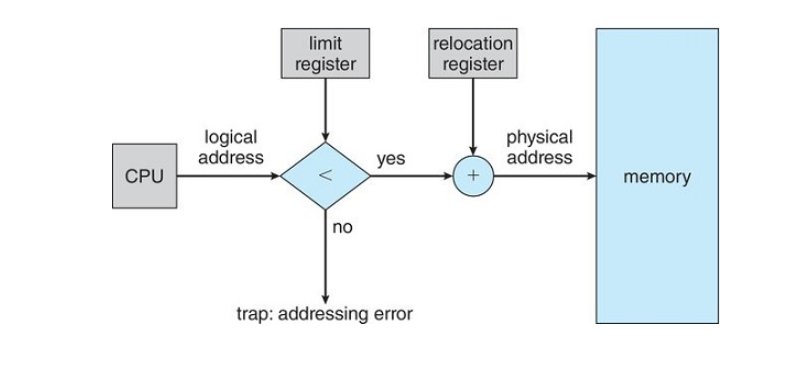

To ensure everything works properly, we need to make sure that each process has a separate memory space. To separate memory spaces, we need to determine the range of legal addresses that a process may access and to ensure that the process can only access these addresses. This can be done with two registers:

- Base Register: holds the smallest legal physical memory address.

- Limit Register specifies the size of the range.

For example, if the base register holds 300040 and the limit register is 120900, the program can access anything between 300040 and 420939(inclusive)

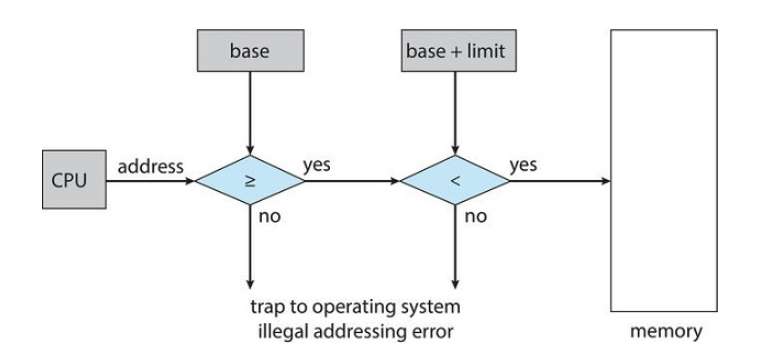

Protection of the memory space is accomplished by having the CPU hardware compare every address generated in user mode with the registers. Any attempt by a program executing in user mode to access operating system memory or other user memory will result in a trap which treats the attempt as a fatal error.

The base and limit registers can only be loaded by the operating system which does so through a privileged instruction.

Address binding

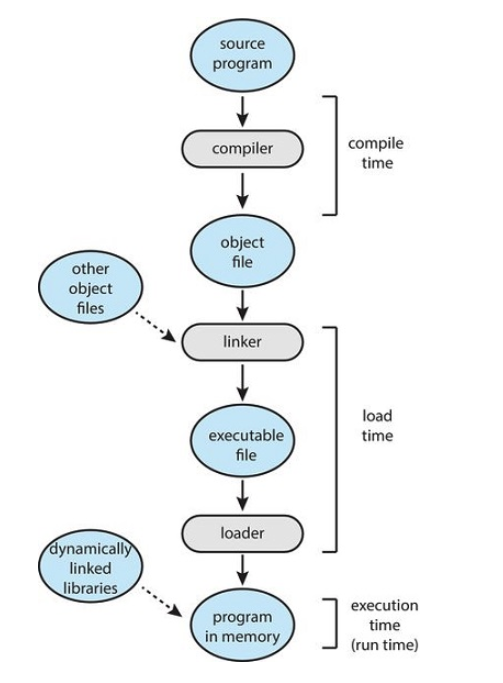

Usually, a program resides on a disk as a binary executable file. To run, the program must be brought into memory and placed within the context of a process where it can be allocated to an available CPU. As that process executes, it accesses instruction and data from memory. Eventually, the process terminates and its memory is reclaimed for use by other processes.

Most systems allow a user process to reside in any part of the physical memory. Thus, although the address space of the computer may start at 00000, the first address of the user process need not be 00000.

Addresses may be represented in different ways during these steps. Addresses in the source program are generally symbolic (such as the variable count). A compiler typically binds these symbolic addresses to relocatable addresses (such as "14 bytes from the beginning of this module"). The linker or loader then binds the relocatable address to absolute addresses. Each binding is a mapping from one address space to another.

Classically, the binding of instructions and data to memory addresses can be done at any step along the way:

- Compile time. If you know at compile time where the process will reside in memory, then absolute code can be generated. For example, if you know that a user process will reside starting at location

, then the generated compiler code will start at that location and extend up from there. If, at some later time, the starting location changes, then it will be necessary to recompile this code. - Load time. If it is not known at compile time where the process will reside in memory, then the compiler must generate relocatable code. In this case, final binding is delayed until load time. If the starting address changes, we need only reload the user code to incorporate this changed value.

- Execution time. If the process can be moved during its execution from one memory segment to another, then binding must be delayed until run time. Special hardware must be available for this scheme to work, as will be discussed in Section Logical versus physical address space. Most operating systems use this method.

Logical vs Physical Address Space

An address generated by the CPU is referred to as a Logical Address where as an address seen by the memory unit is referred to as a physical address

Binding addresses at compile time or load time generates identical logical and physical addresses, however, , the execution time address binding scheme results in differing logical and physical addresses. In this instance, the logical address is usually referred to as a virtual address. Often, logical and virtual addresses can be used interchangeably.

The set of all logical addresses generated by a program is the logical address space. The set of physical addresses is likewise known as the physical address space

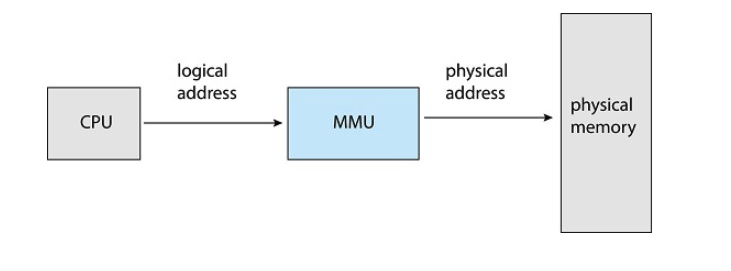

Runtime mapping from virtual to physical addresses is done by a piece of hardware called the memory-management unit or MMU

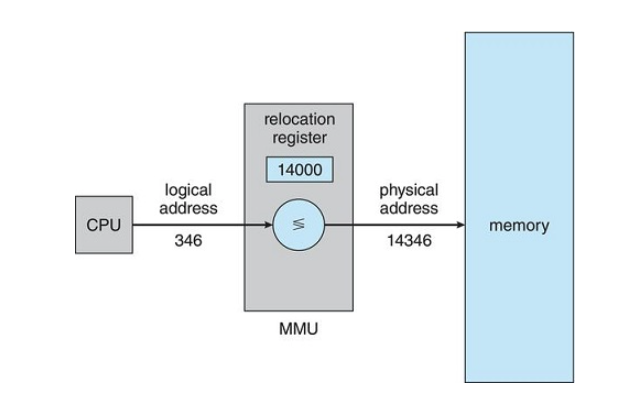

A generalized approach renames the base register to be known as the relocation register. The value in the relocation register is added to every address generated by a user process when that address is sent to memory. For example, if the base is at 14000, than an attempt by the user to address location 0 is dynamically located to address 14000, or, an access to location 346 is mapped to address 14346

The user program never actually accesses the real physical address. The program creates a pointer to location 346, manipulates it, compares it, stores it, does whatever...and it always thinks it's accessing location 346. Only when it's used as a memory address(in an indirect load or store for example) is it relocated relative to the base register. The user program deals with logical addresses then the hardware converts it to physical addresses.

We now have two different types of addresses: logical addresses (in the range 0 to max) and physical addresses (in the range

Dynamic Loading

The size of a process has thus been limited to the size of physical memory. To obtain better memory-space utilization, we can use dynamic loading. With dynamic loading, a routine is not loaded until it is called. All routines are kept on disk in a relocatable load format. The main program is loaded into memory and is executed. When a routine needs to call another routine, the calling routine first checks to see whether the other routine has been loaded. If it has not, the relocatable linking loader is called to load the desired routine into memory and to update the program's address tables to reflect this change. Then control is passed to the newly loaded routine.

The advantage of dynamic loading is that a routine is loaded only when it is needed.

In such a situation, although the total program size may be large, the portion that is used (and hence loaded) may be much smaller.

Dynamic loading does not require special support from the operating system. It is the responsibility of the users to design their programs to take advantage of such a method. Operating systems may help the programmer, however, by providing library routines to implement dynamic loading.

Dynamic Linking and Shared Libraries

Dynamically linked libraries (DLLs) are system libraries that are linked to user programs when the programs are run

Some operating systems support only static linking, in which system libraries are treated like any other object module and are combined by the loader into the binary program image. Dynamic linking, in contrast, is similar to dynamic loading. Here, though, linking, rather than loading, is postponed until execution time.

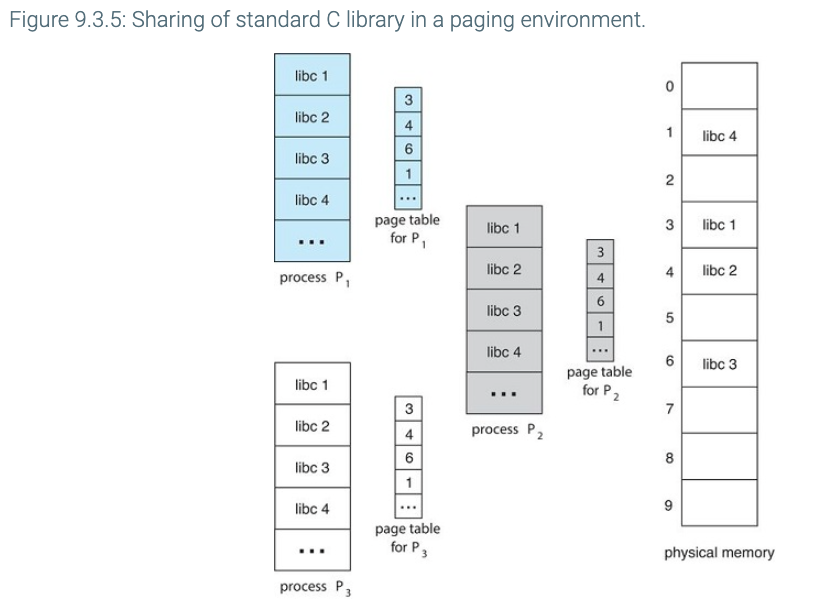

Without this facility, each program on a system must include a copy of its language library (or at least the routines referenced by the program) in the executable image. This requirement not only increases the size of an executable image but also may waste main memory. A second advantage of DLLs is that these libraries can be shared among multiple processes, so that only one instance of the DLL in main memory. For this reason, DLLs are also known as shared libraries, and are used extensively in Windows and Linux systems.

When a program references a routine that is in a dynamic library, the loader locates the DLL, loading it into memory if necessary. It then adjusts addresses that reference functions in the dynamic library to the location in memory where the DLL is stored.

Dynamically linked libraries can be extended to library updates (such as bug fixes). In addition, a library may be replaced by a new version, and all programs that reference the library will automatically use the new version.

Unlike dynamic loading, dynamic linking and shared libraries generally require help from the operating system. If the processes in memory are protected from one another, then the operating system is the only entity that can check to see whether the needed routine is in another process's memory space or that can allow multiple processes to access the same memory addresses.

9.2 Contiguous Memory Allocation

One early method of memory allocation was known as contiguous memory allocation. Each process was contained in a single section of memory that is contiguous.

Memory Protection

When the CPU scheduler selects a process for execution, the dispatcher loads the relocation and limit registers with the correct values as part of the context switch. Because every address generated by a CPU is checked against these registers, we can protect both the operating system and the other users' programs and data from being modified by this running process.

The relocation register scheme allows the operating system to dynamically change the allocated memory size.

Memory Allocation

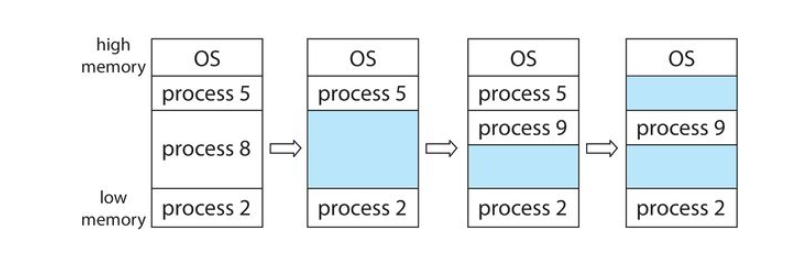

One of the simplest methods of allocating memory is to assign processes to variably sized partitions in memory, where each partition may contain exactly one process. This is known as variable-partitioning. In this system, the operating system keeps a table indicating which parts of memory are available and which are occupied.

As processes enter the system, the operating system takes into account the memory requirements of each process and the amount of available memory space in determining which processes are allocated memory. When a process is allocated space, it is loaded into memory, where it can then compete for CPU time. When a process terminates, it releases its memory, which the operating system may then provide to another process.

If there is insufficient memory to satisfy the needs of the arriving process, the operating system may reject the process or place it in a wait queue.

This procedure is a particular instance of the general dynamic storage-allocation problem, which concerns how to satisfy a request of size

-

First fit. Allocate the first hole that is big enough. Searching can start either at the beginning of the set of holes or at the location where the previous first-fit search ended. We can stop searching as soon as we find a free hole that is large enough.

-

Best fit. Allocate the smallest hole that is big enough. We must search the entire list, unless the list is ordered by size. This strategy produces the smallest leftover hole.

-

Worst fit. Allocate the largest hole. Again, we must search the entire list, unless it is sorted by size. This strategy produces the largest leftover hole, which may be more useful than the smaller leftover hole from a best-fit approach.

Fragmentation

First fit and best fit both suffer from external fragmentation. As processes are loaded and removed from memory, the space is broken into smaller and smaller pieces. When there is enough total memory space to hold a process, but no single block large enough, this is known as external fragmentation.

Memory fragmentation can be internal as well as external. Consider a multiple-partition allocation scheme with a hole of 18,464 bytes. Suppose that the next process requests 18,462 bytes. If we allocate exactly the requested block, we are left with a hole of 2 bytes. The overhead to keep track of this hole will be substantially larger than the hole itself. The general approach to avoiding this problem is to break the physical memory into fixed-sized blocks and allocate memory in units based on block size. With this approach, the memory allocated to a process may be slightly larger than the requested memory. The difference between these two numbers is internal fragmentation—unused memory that is internal to a partition.

9.3 Paging

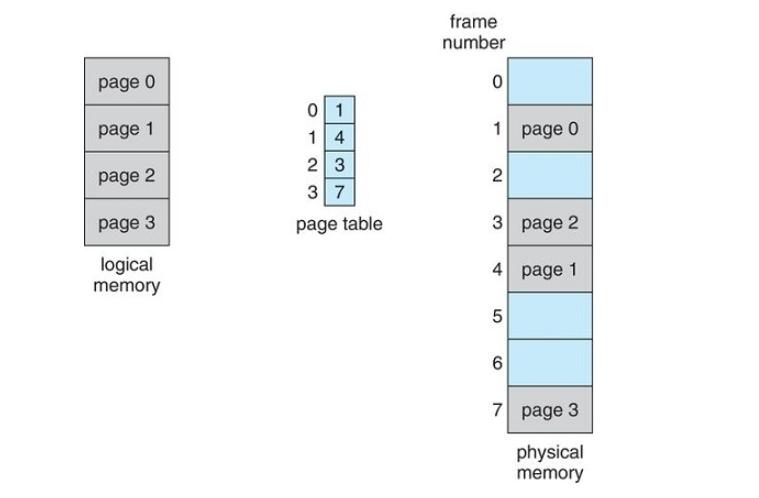

Paging is a memory management scheme that permits a process's physical address space to be noncontiguous. Paging avoids external fragmentation and the associated need for compaction.

Basic Method

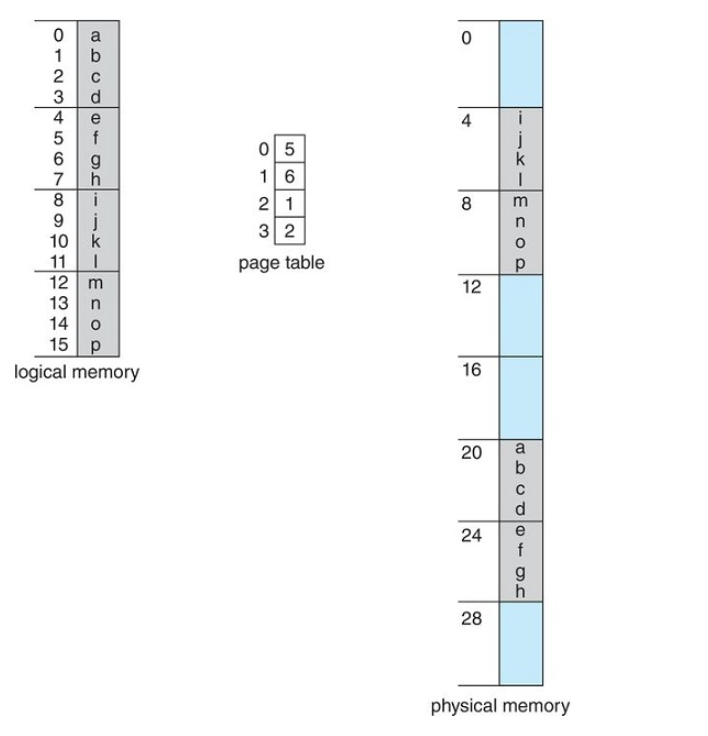

The basic method for implementing paging involves breaking physical memory into fixed-sized blocks called ** frames** and breaking logical memory into blocks of the same size called pages. When a process is to be executed, its pages are loaded into any available memory frames from their source (a file system or the backing store). The backing store is divided into fixed-sized blocks that are the same size as the memory frames or clusters of multiple frames. What this does is create a more useable memory space. It separates the logical address space from the physical address space. A process can have a logical 64-bit address space even though the system might only have

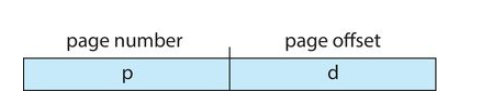

Each address generated by the CPU is divided into two parts, a page number and a page offset.

The page number is used as an index into a per-process page table. The page table contains the base address of each frame in physical memory, and the offset is the location in the frame being referenced. The base address of the frame is combined with the page offset to define the actual physical memory address.

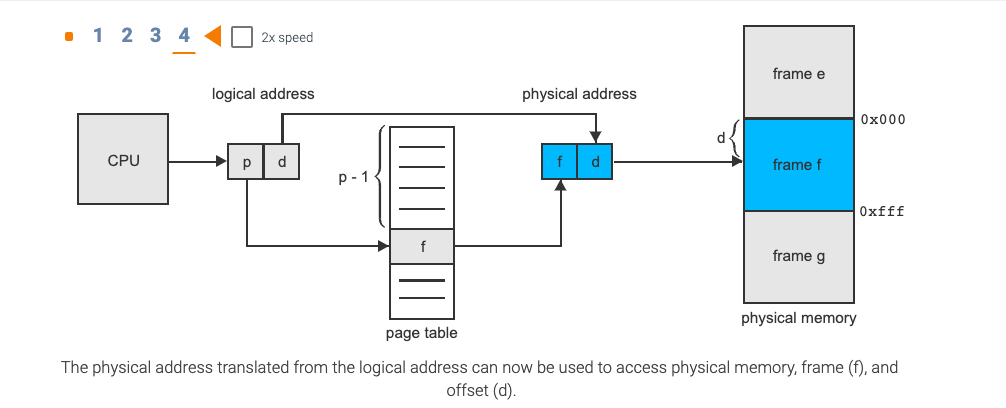

- The logical address generated by the CPU is sent to the MMU where it is divided into a page number (p) and an offset (d). The number of bits in each part depends on the page size.

- The page number is used as an index into the page table. The entry in the page table at that index is the number of the frame of physical memory containing the page. That frame number is the first part of the physical memory address.

- The offset (d) within the page is the same as the offset within the frame, so it is retained, together with the frame number forming the physical address.

- The physical address translated from the logical address can now be used to access physical memory, frame (f), and offset (d).

Frame sizes are always a power of 2, usually between 512 bytes and 16mb

The following outlines the steps taken by the MMU to translate a logical address generated by the CPU to a physical address:

- Extract the page number

and use it as an index into the page table. - Extract the corresponding frame number

from the page table. - Replace the page number

in the logical address with the frame number . - As the offset

does not change, it is not replaced, and the frame number and offset now comprise the physical address.

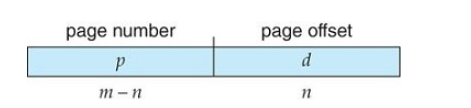

The page size (like the frame size) is defined by the hardware. The size of a page is a power of 2, typically varying between 4 KB and 1 GB per page, depending on the computer architecture. The selection of a power of 2 as a page size makes the translation of a logical address into a page number and page offset particularly easy. If the size of the logical address space is, and a page size is bytes, then the high-order bits of a logical address designate the page number, and the low-order bits designate the page offset. Thus, the logical address is as follows:

where

For example:

Here, in the logical address,

When we use a paging scheme, we have no external fragmentation: any free frame can be allocated to a process that needs it. However, we may have some internal fragmentation.

f the memory requirements of a process do not happen to coincide with page boundaries, the last frame allocated may not be completely full. For example, if page size is 2,048 bytes, a process of 72,766 bytes will need 35 pages plus 1,086 bytes. It will be allocated 36 frames, resulting in internal fragmentation of 2,048 − 1,086 = 962 bytes. In the worst case, a process would need

Frequently, on a 32-bit CPU, each page-table entry is 4 bytes long, but that size can vary as well. A 32-bit entry can point to one of

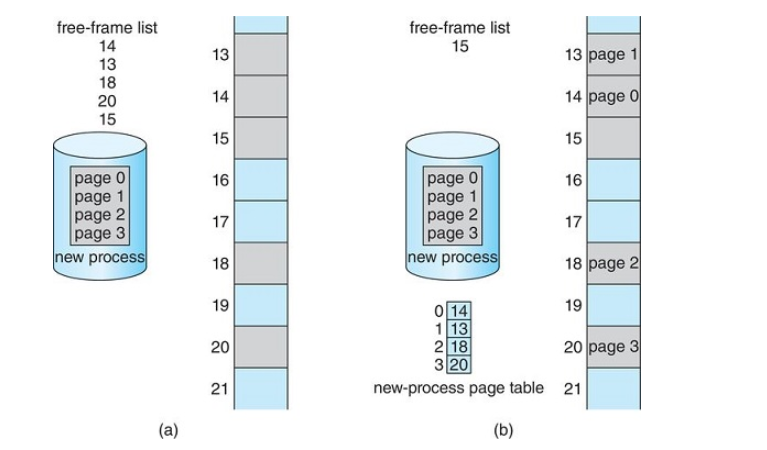

When a process arrives in the system to be executed, its size, expressed in pages, is examined. Each page of the process needs one frame. Thus, if the process requires

Free frames (a) before allocation, and (b) after allocation

Since the operating system is managing physical memory, it must be aware of the allocation details of physical memory—which frames are allocated, which frames are available, how many total frames there are, and so on. This information is generally kept in a single, system-wide data structure called a frame table. The frame table has one entry for each physical page frame, indicating whether the latter is free or allocated and, if it is allocated, to which page of which process (or processes).

As page tables are per-process data structures, a pointer to the page table is stored with the other register values (like the instruction pointer) in the process control block of each process. When the CPU scheduler selects a process for execution, it must reload the user registers and the appropriate hardware page-table values from the stored user page table.

Hardware support

The hardware implementation of the page table can be done in several ways. In the simplest case, the page table is implemented as a set of dedicated high-speed hardware registers, which makes the page-address translation very efficient. However, this approach increases context-switch time, as each one of these registers must be exchanged during a context switch.

If a page table is small, this works, however, if it's large, like most modern systems, a machine may use a page table in main memory and a page-table base register is used that points to the page table.

Translation Look Aside Buffer

Because storing a page table in main memory may be slow due to memory access times, a solution was created to deal with this. A small hardware cache called a translation look-aside buffer(TLB) may be used. Each TLB entry consists of a key-value pair. When the memory is presented with an item, the item is compared with all keys simultaneously. If the item is found, the corresponding value field is returned.

Protection

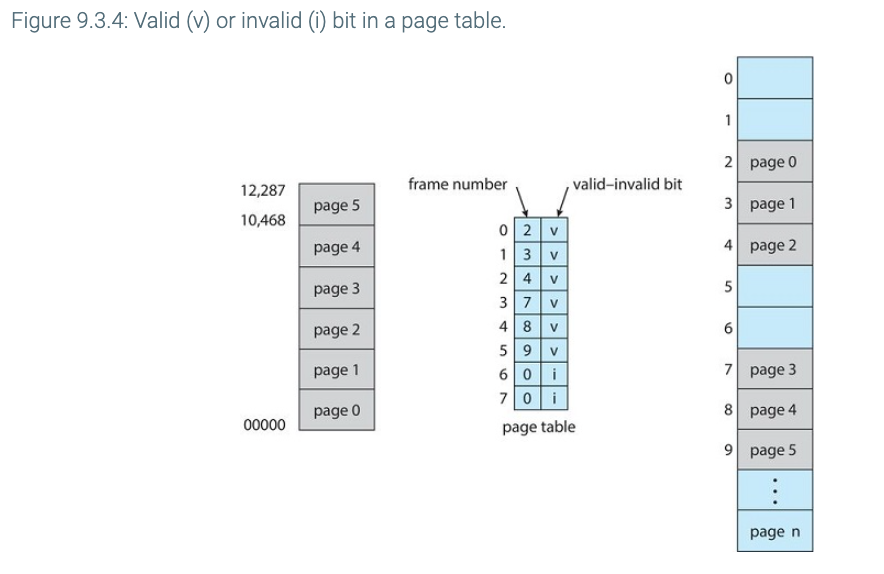

Memory protection in a paged environment is accomplished by protection bits associated with each frame. Normally, these bits are kept in the page table.

One bit can define a page to be read-write or read-only. Every reference to memory goes through the page table to find the correct frame number. At the same time that the physical address is being computed, the protection bits can be checked to verify that no writes are being made to a read-only page. An attempt to write to a read-only page causes a hardware trap to the operating system (or memory-protection violation).

We can create hardware to provide read-only, read-write, or execute-only protection; or, by providing separate protection bits for each kind of access, we can allow any combination of these accesses. Illegal attempts will be trapped to the operating system.

One additional bit is generally attached to each entry in the page table: a valid-invalid bit. When this bit is set to valid, the associated page is in the process's logical address space and is thus a legal (or valid) page. When the bit is set to invalid, the page is not in the process's logical address space. Illegal addresses are trapped by use of the valid-invalid bit. The operating system sets this bit for each page to allow or disallow access to the page.

Shared Pages

9.5 Swapping

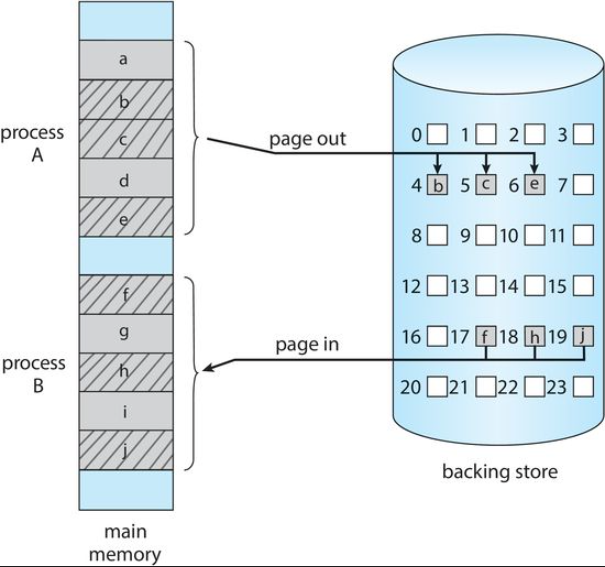

Process instructions and the data they operate on must be in memory to be executed. However, a process, or a portion of a process, can be swapped temporarily out of memory to a backing store and then brought back into memory for continued execution.

Swapping makes it possible for the total physical address space of all processes to exceed the real physical memory of the system, thus increasing the degree of multiprogramming in a system.

Standard Swapping

Standard swapping involves moving entire processes between main memory and a backing store. The backing store is commonly fast secondary storage. It must be large enough to accommodate whatever parts of processes need to be stored and retrieved, and it must provide direct access to these memory images. When a process or part is swapped to the backing store, the data structures associated with the process must be written to the backing store. For a multithreaded process, all per-thread data structures must be swapped as well. The operating system must also maintain metadata for processes that have been swapped out, so they can be restored when they are swapped back in to memory.

Standard swapping allows physical memory to be "over subscribed"..the system can accommodate more processes than there is actual physical memory to store. Idle processes are the best candidates to swap

Swapping with Paging

Most systems, including Linux and Windows, now use a variation of swapping in which pages of a process—rather than an entire process—can be swapped. This strategy still allows physical memory to be oversubscribed, but does not incur the cost of swapping entire processes, as presumably only a small number of pages will be involved in swapping. In fact, the term swapping now generally refers to standard swapping, and paging refers to swapping with paging.

A page out operation moves a page from memory to the backing store; the reverse process is known as a page in.